8.1 – The Scientific Method

Now that we are officially covering inferential statistics, it is important to set the stage by discussing the scientific method. Many of the strategies that you will learn in this chapter are best understood within the framework of the scientific method, because some of them may feel almost counter-intuitive at first. That is not by chance. The scientific method is purposely set up to minimize many of the negative impacts that result from the typical ways that humans think. This, I will argue, is why the scientific method has been such an important and impactful way to search for the "truth."

What is the Scientific Method?

Definitionally, the scientific method is an empirical method, or strategy, to discover facts about the world, emphasizing careful observation, making and testing hypotheses, and sharing results within a scientific community. It employs a strong skepticism to minimize errors due to common cognitive biases. In order to better understand the scientific method, let's deconstruct it and explore some significant features that make it so useful and effective:

- It is a method, or process, not a destination.

- It dramatically curtails the impact of cognitive biases that can lead to wrong conclusions.

- It requires the use of hypotheses, or predictions, that can then be tested.

- The testing process is focused on disproof, rather than trying to prove things.

- It requires falsifiability in order to ensure that testing can provide usable feedback on our hypotheses and theories.

- Finally, scientific conclusions are a part of a continual process and thus are tentative, and may change with new evidence or approaches.

Method

First off, it is important to recognize that the scientific method is a method, or way of going about trying to discover facts or get closer to the "truth." Too often, when people refer to "science," they focus only on the people who do science, or "scientists," as if science were anything done in a lab while wearing a white lab coat. But wearing a lab coat does not necessarily make what those people are doing "science." Technically, when people talk about "science," they are really talking about the scientific method being applied to answer a question. In fact, anyone can do "science" if they follow the procedures of the scientific method.

It can also help to understand that there are other "methods" for trying to discover facts or get closer to the "truth." One way to think about this is to ask yourself, "what are some things that I believe to be true?" You might come up with a list that looks something like this:

- The sky is blue.

- The sun rises in the east.

- I am hungry.

- I am thinking about the question, "what are some things that I believe to be true?"

- Gravity exists.

- The earth orbits the sun.

- Social media makes people more depressed.

- Bigfoot exists.

Next, ask yourself, "how did I come to believe these things are true?" It is here where we can start to categorize these answers into various methods.

Let's take the first 3 "truths" on the list above. Many people would explain that these are "true" because you can see, or observe, them. We call this the "empirical method" or the "method of empiricism." This method derives facts or "truths" from observations. One benefit of this method is that it can be experienced directly, and it is sometimes very quick (e.g., look at the sky: is it blue?). One cost is that people can observe different things in different situations or at different times, and two people can perceive the same thing differently. We can also be fooled by what we see, such as with visual illusions.

The 4th "truth" on the list above might be explained as being observed or experienced, which would be the empirical method, but it is also similar to the "method of rationalism." This method applies logic and rational thought to derive "truths." The famous philosopher René Descartes suggested that in order to find "truths," you need to start with rigorous doubt about what you believe to be true. Then you find something that is undeniably true and use logic to derive new truths from it. One of the undeniable truths he was able to discern was that he was thinking (thinking about what is undeniably true). Thus, if that was "true," what "truth" could logically follow? That's where he came up with the Latin phrase, "cogito, ergo sum," which means, "I think, therefore I am." In other words, he was able to determine that he was thinking, and if he was thinking, then logic tells him that he must exist, because he wouldn't be able to think if he didn't exist. One of the benefits of this method is that it follows logical rules and thus can be deconstructed by others to look for potential flaws. It also has the benefit of starting from a position that assumes a healthy dose of doubt or skepticism. One of the costs is that, as you see with Descartes, it may not lead to a plethora of new "truths." It's also possible that a fact or "truth" has a logic to it that we just can't see yet.

The 5th and 6th truths on our list sound very scientific, and many people would argue that they used science to come to these truths. However, it is probably more accurate to describe these truths as coming from the "method of authority." In other words, we might know these to be true because someone who we view as an authority told us that they were true. One of the benefits of this method is that it allows us to expand human knowledge quickly, without having to start at square one every time. For example, you yourself don't need to "discover" things like integers or the concept of zero. Instead, you can simply learn about them in school relatively quickly (especially when compared to the centuries it took humans to discover them in the first place). Each human generation is essentially able to take the knowledge from the previous generation and use their time on the planet to expand that knowledge. The cost of this method is that "authorities" can sometimes be wrong, and we can have a hard time discerning which experts we should be listening to.

Application: The Method of Authority and the COVID Pandemic Response

One of the things that stands out about the U.S. response to the COVID pandemic, especially during the first year, is that in October 2019, only months before COVID exploded on the world stage, the U.S. was ranked #1 out of 195 countries on the Global Health Security Index by the Johns Hopkins Center for Health Security.[1] This top ranking was due in large part to a small group of experts in epidemiology and public health who had developed a pandemic response plan during the early 2000s after the president at the time, George W. Bush, read the book The Great Influenza by John M. Barry.[2] In fact, many countries around the world began to copy the U.S. pandemic response plan because they didn't have one themselves.

Then fast-forward to the summer of 2020, only months after the #1 ranking, and the U.S. infection and death rates from the COVID pandemic were among the worst in the world. How did the U.S. go from being the most prepared for a pandemic to being one of the most devastated by one? It appears that it was due to putting trust in the wrong "authorities." The pandemic plan prepared by experts was initially not followed in the U.S., and people were soon looking to "armchair" epidemiologists and virologists: people with no expertise or training in disciplines directly related to the pandemic. Not surprisingly, other countries that had copied the U.S. pandemic preparedness plan were much more successful at minimizing the devastating impacts of the virus because they followed the experts' plan.

The method of authority can be a very useful tool to understand facts and get closer to the "truth," but it relies on two important things:

- Identifying true experts

- The experts minimizing their biases

And this brings us to the scientific method as a strategy for discerning the "truth." In a way, the scientific method combines many of the previous strategies because:

- Coming up with a research question often comes from intuitive feelings about the world (method of intuition).

- Researchers make every attempt to use "objective" observations or measurements (method of empiricism).

- Research theories and hypotheses need to be logical (method of rationalism).

- Research takes place in a community of scientists who share their findings so they can challenge and learn from one another (method of authority).

However, there are some key additions that make the scientific method so effective, and in order to understand these additions, it is important to recognize that all humans have some pretty serious cognitive biases that lead them to believe in things that are simply wrong.

Cognitive Biases

Humans evolved much larger brains (in proportion to body size) than most animals. These brains require a lot of energy to run, so it would make sense that humans developed "shortcuts" that help minimize cognitive load. These shortcuts are often called "heuristics": methods or calculations that produce a decent, though not perfect, answer most of the time, a lot like a "rule of thumb." For example, let's say you are walking down a trail, you hear rustling in the brush, and then you get attacked by a small mountain lion. You survive, but it was a scary experience. Chances are that the next time you hear rustling in the brush while walking down a trail, you will quickly ready yourself to fight off an attack. While this is a reasonable response, it can also frequently lead to unnecessary reactions if there are very few mountain lions and, 999 times out of 1000, the rustling is simply due to harmless animals or the wind. What has emerged is a "cognitive bias": our brain reacts quickly, without any substantial consideration, and is biased toward a particular interpretation of the rustling in the brush.

It might seem that these biases are small and inconsequential. However, they are a serious obstacle to our search for facts and the "truth." In a lot of ways, humans are pretty susceptible to being fooled. We've already mentioned optical illusions as examples of incorrect perceptions, but another example involves magical illusions. Many magicians or illusionists fool us because they take advantage of our many cognitive biases. Sometimes they can use the fact that we can't pay attention to everything so they simply distract us and get us to look "over here" while they do something surreptitiously "over there." Sometimes they play with the fact that our brain will often create incorrect memories so that our experiences make more sense.

Another simple trick, however, is for the magician to not tell their audience what they are going to do. For example, let's say that a magician has you roll a six-sided die (the singular of "dice"):

And like the die in our picture, it comes up a "six." Then the magician pulls out a folded piece of paper and asks you to unfold it and read what it says. When you open up the paper, it very clearly has handwriting that says, "you will roll a 6." At this point, some of you might be impressed, given that there is only a [latex]P(\text{roll a 6}) = \frac{\text{number of sides with a 6}}{\text{total number of sides}} = \frac{1}{6} \approx 0.167[/latex] probability, or a 16.7% chance, of the magician guessing correctly, which is relatively low.

However, there is a pretty simple explanation for this "magic:" the magician has a total of six folded-up pieces of paper that they could hand to you, and each one says you will roll a different side of the die. Thus, all the magician has to do is remember where they all are and then hand you the correct one depending on the result of your roll.
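The difference between committing to one prediction and secretly holding all six can be checked by counting outcomes. The minimal Python sketch below assumes a fair die (all six faces equally likely); the function names are hypothetical, chosen only for illustration.

```python
from fractions import Fraction

FACES = range(1, 7)  # a fair six-sided die: each face equally likely


def committed_prediction_success(prediction: int) -> Fraction:
    """Probability of success when one written prediction is fixed before the roll."""
    matches = sum(1 for roll in FACES if roll == prediction)
    return Fraction(matches, len(FACES))


def six_slips_success() -> Fraction:
    """Probability of 'success' when one slip per face is prepared and the
    matching slip is chosen only after the roll has been seen."""
    slips = {face: f"you will roll a {face}" for face in FACES}
    matches = sum(1 for roll in FACES if slips[roll] == f"you will roll a {roll}")
    return Fraction(matches, len(FACES))


print(committed_prediction_success(6))  # 1/6
print(six_slips_success())              # 1
```

A single committed prediction succeeds only 1 time in 6, while the six-slip strategy "succeeds" every time, which is exactly why it tells us nothing about the magician's ability to predict.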

This brings us to the importance of making a prediction, or stating a hypothesis.

Hypotheses

Another important component of the scientific method is that it requires researchers to make and then test hypotheses. For the above magic example, if we required the magician to state a hypothesis about the magic trick, they would have to tell us in advance exactly what they were going to do. As such, they might say: "I'm going to have you roll this die and we will see what comes up. Then I'm going to reach into the right-hand pocket of my trousers and pull out a sheet of paper and hand it to you. Then you will open the paper and read it."

If they did this, the trick would no longer work if the other five slips of paper were kept elsewhere in their outfit, because the magician would be committed to the one slip in the right-hand pocket. And if they put all six slips in that same pocket, most people would want them to empty the pocket at the end of the trick, and the trick would again be spoiled as all of the other slips of paper were revealed.

Disproof

One cognitive bias that is particularly problematic is called the confirmation bias. The confirmation bias leads people to try to "confirm" their previously held beliefs: they look for, or pay attention to, only the evidence that supports those beliefs. For example, if I believe that all psychology students are caring, I will probably notice and remember psychology students who are caring. Undoubtedly, I will also run into psychology students who are not caring. Confirmation bias will lead me either to not notice that this evidence disproves my belief or to write this student off as an outlier or an exception that, somehow in my biased mind, has no bearing on my belief, even though it actually disproves it.

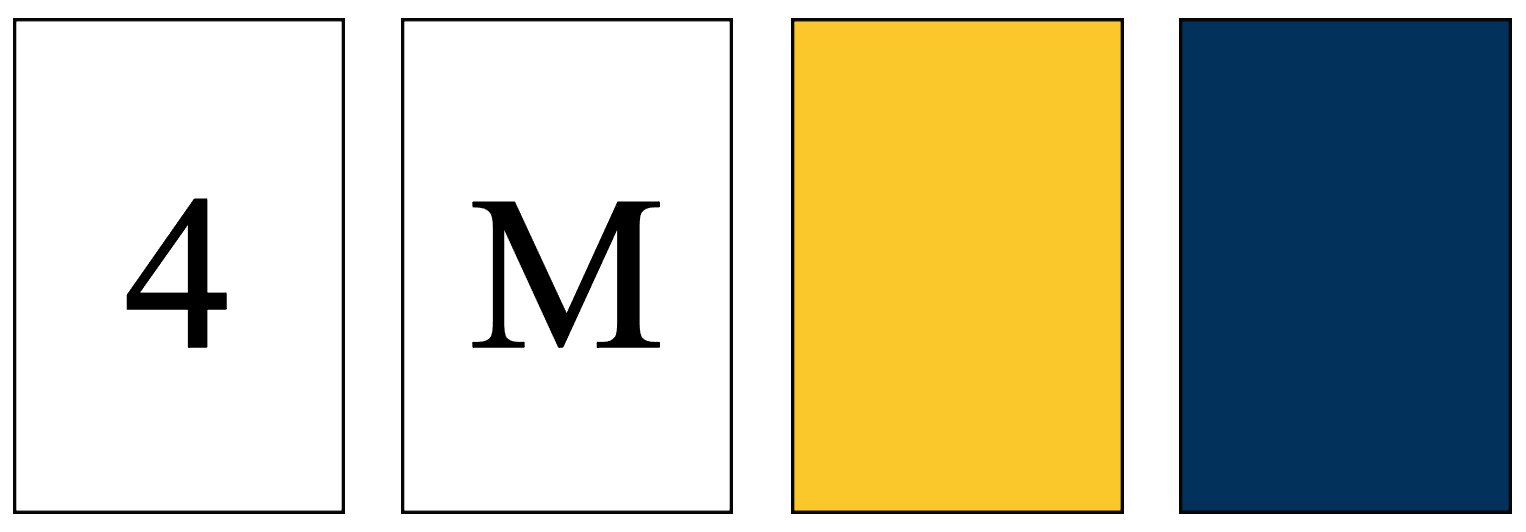

In a seminal research study on the confirmation bias, now referred to as the "Wason selection task," psychologist Peter Wason (1968)[3] showed participants four cards, each with a colored side and a side with either a number or a letter, such as:

Participants were then asked to identify which card(s) they would need to turn over to test the rule: "If a card has a number on one side, then it must be yellow on the opposite side."

The most common answer was to turn over the "4" and the "yellow" cards, which is incorrect. In fact, fewer than 10% of the original research participants gave the correct answer.[4] This finding was also replicated in 1993.[5]

Here are the possible results of turning over each card:

- 4 - If the other side is blue, it violates the rule.

- M - If the other side is either yellow or blue, it does not violate the rule.

- Yellow - If the other side is either a number or a letter, it does not violate the rule.

- Blue - If the other side is a number, it violates the rule.

Based on the above outcomes, the only cards that could provide information to test the rule are the "4" and the "blue" cards. Notice that the correct cards to turn over are the ones that could reveal a violation of the rule; in other words, they could disprove the rule.
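The card-by-card reasoning above can be checked mechanically: for each visible face, try every possible hidden face and ask whether any of them would break the rule. This is a minimal Python sketch, assuming only two colors (yellow and blue) and classifying symbols simply as "number" or "letter"; the names are illustrative, not taken from Wason's study.

```python
SYMBOL_KINDS = ("number", "letter")
COLORS = ("yellow", "blue")


def violates(symbol_kind: str, color: str) -> bool:
    """'If a number, then yellow' is broken only by a number paired with a non-yellow side."""
    return symbol_kind == "number" and color != "yellow"


def could_falsify(visible_face: str) -> bool:
    """True if at least one possible hidden face would make this card break the rule."""
    if visible_face in COLORS:
        # The hidden side is a symbol: check both a number and a letter.
        return any(violates(kind, visible_face) for kind in SYMBOL_KINDS)
    # The hidden side is a color: classify the visible symbol, then check both colors.
    kind = "number" if visible_face.isdigit() else "letter"
    return any(violates(kind, color) for color in COLORS)


cards = ["4", "M", "yellow", "blue"]
print([card for card in cards if could_falsify(card)])  # ['4', 'blue']
```

Only the cards that could possibly reveal a violation are worth turning over; the "yellow" card drops out because no hidden face can make it break the rule.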

This study is associated with the confirmation bias partly because of most people's tendency to choose the "yellow" card. Turning over the "yellow" card won't give us any information about our rule, because nothing on its other side can violate the rule. One way to think of it is to consider a rule that states: "if A, then B." This rule does not also mean: "if B, then A." As a result, if we see B and then find A, this does not actually tell us anything about the original rule ("if A, then B"). Applied to the cards, the rule "if a card has a number, then the other side must be yellow" does not imply that "if a card is yellow, then the other side must be a number."
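The difference between "if A, then B" and its converse "if B, then A" can be seen in a small truth table. In this Python sketch, A stands for "the card has a number" and B for "the card is yellow"; `implies` is a helper written for illustration that implements the standard logical conditional.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Logical conditional: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q


# A = "the card has a number", B = "the card is yellow"
for a, b in product([False, True], repeat=2):
    print(f"A={a!s:<5} B={b!s:<5}  rule A->B: {implies(a, b)!s:<5}  converse B->A: {implies(b, a)}")

# A yellow card means B is true. Whatever is on its back (A true or false), the rule holds.
print(all(implies(a, True) for a in (False, True)))  # True
```

The rule and its converse disagree whenever exactly one of A and B is true, and a yellow card (B true) is consistent with the rule no matter what is on its other side.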

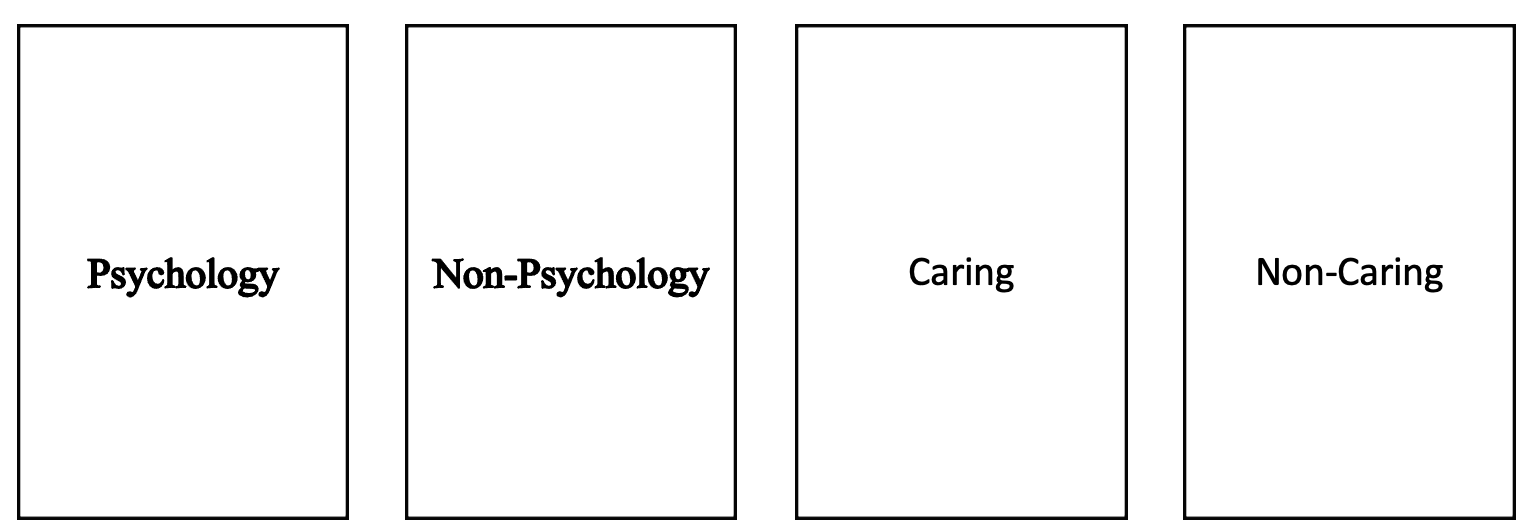

Interestingly, while the vast majority of people struggle with this card exercise, they don't tend to make the same mistake with less abstract ideas. For example, let's go back to my original example of confirmation bias with the rule: "all psychology students are caring." Think of it as cards, where on one side of the card you have "psychology student" or "non-psychology student," and the other side is "caring" or "non-caring." Which of these four cards would you turn over to test the rule that all psychology students are caring?

Hopefully, it is now easier to see that you would want to flip the "psychology" and the "non-caring" cards. Finding out whether someone who is caring is either a psychology or non-psychology student doesn't really tell you anything about whether "all psychology majors are caring." There are lots of caring people who are psychology and non-psychology majors. But finding out whether someone who is not caring is a psychology major does tell us that our belief that "all psychology majors are caring" is wrong because we just found one who isn't.

The human tendency toward confirmation bias has resulted in human history being full of examples where we believed things that were simply not true. And if you asked people during that time about those false beliefs, they would have all sorts of good-sounding reasons and evidence for their beliefs. The problem was that they spent their energies trying to support or prove their beliefs, rather than trying to disprove them.

Falsifiability

In the end, this leads us to a very important component of the scientific method: trying to disprove a hypothesis. As the above examples indicate, finding evidence in support of your belief does not prove that it is correct. The only way we can actually test our beliefs or hypotheses effectively is to try to "falsify" them. This means that the strongest beliefs, hypotheses, or theories are the ones that could be falsified but have survived repeated attempts to falsify or disprove them.

The scientific method requires that all scientific theories or hypotheses need to be falsifiable. Falsifiability refers to the possibility of being able to disprove or "falsify" a statement, hypothesis, or theory. For example, let's take our idea that "all psychology students are caring." If we find just one non-caring psychology student, that would falsify or disprove that statement.
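The asymmetry between confirming and falsifying a universal claim can be sketched in a few lines of Python. The `Student` records below are hypothetical and invented purely for illustration; the search looks only for a counterexample to "all psychology students are caring."

```python
from typing import NamedTuple, Optional, Sequence


class Student(NamedTuple):
    label: str          # hypothetical identifier, e.g. "student_1"
    psych_major: bool
    caring: bool


def find_counterexample(sample: Sequence[Student]) -> Optional[Student]:
    """Return the first psychology student who is NOT caring, or None if the sample has none.
    None means only 'not falsified by this sample', never 'proven true'."""
    for student in sample:
        if student.psych_major and not student.caring:
            return student
    return None


# Hypothetical samples, invented purely for illustration
sample_a = [Student("student_1", True, True),
            Student("student_2", False, False),   # a non-caring NON-psychology student
            Student("student_3", True, True)]
sample_b = sample_a + [Student("student_4", True, False)]

print(find_counterexample(sample_a))  # None: the claim survives this test but is not proven
print(find_counterexample(sample_b))  # Student(label='student_4', psych_major=True, caring=False)
```

No number of caring psychology students can prove the claim, and a non-caring non-psychology student is irrelevant to it, but a single non-caring psychology student is enough to falsify it.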

It is important to recognize that "falsifiable" does not refer to a statement simply being false or wrong. Instead, it refers to the possible outcomes that could potentially result from a test of the statement, with one of the outcomes potentially being a result that goes completely against, or falsifies the statement, such as the possibility of finding a psychology student who is not caring. Let's use a different statement to explore this concept: "all psychology students are non-human." Many people will feel like this statement is not falsifiable because it logically seems false (students tend to be human). However, it is still a falsifiable statement because we could test it by sampling some psychology students (which is a test of the statement) and it is possible that we would find a psychology student who is human. This would falsify the statement. Similarly, what about the statement: "all psychology students are human?" Many people will feel like this statement is also not falsifiable because they can't imagine sampling psychology students and finding a non-human. However, if you did find a non-human in your psychology student sample, that would technically falsify the statement, and we are "able to falsify" the statement, and thus it is a falsifi-able statement.

So what would constitute a non-falsifiable statement? One example is the statement "faeries exist." If we were to try to test this statement, there is no result that would technically falsify or disprove it. Someone might point out that "I have never seen a faerie" or "we've never captured a faerie." However, these facts don't mean that faeries don't exist. In other words, the statement is non-falsifiable, and that is due to the way the statement is written: it just doesn't allow for disproof. However, if we tweak the statement to "faeries do not exist," we now have a statement that can be falsified by simply finding a faerie. It doesn't matter that it is very unlikely that we could find a faerie; what matters is that if we did find one, we would have technically falsified the statement.

Application: Falsifiability and the Pseudoscience of The Law of Attraction

In 2006, the movie The Secret and a corresponding book were released and sold millions of copies. The Secret extols the "law of attraction," a theory that states one's thoughts and feelings attract events and experiences, such that one can change one's life by thinking it into existence. It also suggests that the law of attraction has been used by successful people for generations to impact their success and that it has been hidden or kept secret from most people.

In the movie, which uses a documentary style, the law of attraction is made to look like a scientific theory through interviews with scientists and discussions of real scientific concepts that in many ways have little directly to do with the law of attraction. However, the law of attraction is technically not a scientific theory because, for one, it is not falsifiable. If someone wanted to personally test the law of attraction, they could start to repeatedly think about an outcome that they want for their life, such as finding the love of their life or getting a particular job. What if after doing all of this concentrated and directed thinking (and supposedly attracting certain things) they don't find the love of their life or they don't get the job? This would seem to disprove the law of attraction. However, proponents of the law of attraction would argue that they must not have thought about these outcomes in the "correct" way.

As you can hopefully start to see, the law of attraction is not proposed in a way that is falsifiable. Any outcome, especially one in which the person does not attract what they want, would be written off as the result of the person doing things incorrectly. Ultimately, there would never be a way to disprove the law of attraction, and thus it is not scientific. We call these types of theories or hypotheses pseudoscience because they resemble science and often try to leech off its credibility by using scientists, scientific words, scientific theories, etc. But ultimately, when looked at closely, these theories and hypotheses violate the assumptions of science, including falsifiability.

Tentative

A final feature of the scientific method, and one that is underrecognized even by strong proponents of science, is that scientific conclusions are tentative, or provisional. This highlights the fact that science is a cyclical and iterative process. Imagine the scientific method as a circle, much like a wheel. Each scientific study, or each application of the scientific method, involves a full revolution of the wheel. If that wheel is on the ground, then with each revolution the wheel moves forward, and if we want to keep moving forward, we need to keep turning the wheel. Likewise, applying the scientific method to any given research question will require multiple research studies (turns of the wheel), with each one getting closer and closer to the "truth."

In other words, a single research study is only a "first step" toward getting closer to answering a research question. In fact, it is helpful to understand scientific studies and their conclusions as "guesses" about the research question.

This can be somewhat alarming to many people, because there is a belief in society that if a scientific research study shows that, for example, Pain Reliever A reduces pain for individuals who suffer from migraines, then Pain Reliever A works. However, that is not the case, because the finding is simply a tentative conclusion based on the evidence of that one study. It doesn't necessarily mean that Pain Reliever A works. The conclusion of any research study is simply a "guess" and has the potential to be wrong (for example, the people in the study may have experienced pain relief because of something other than Pain Reliever A).
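To make the idea that a single study can be wrong more concrete, here is a minimal Python simulation under loudly hypothetical assumptions: pain is scored on a made-up scale with mean 5 and standard deviation 2, each study has 10 people per group, and "looks effective" means the drug group's average is at least 1 point lower. Because the simulated drug is built to do nothing, every "effective" result is a wrong conclusion produced by chance alone.

```python
import random


def one_null_study(rng: random.Random, n_per_group: int) -> float:
    """One hypothetical study in which the pain reliever has NO real effect:
    placebo and drug pain scores come from the same (assumed) distribution.
    Returns the apparent pain reduction, mean(placebo) - mean(drug)."""
    placebo = [rng.gauss(5.0, 2.0) for _ in range(n_per_group)]
    drug = [rng.gauss(5.0, 2.0) for _ in range(n_per_group)]
    return sum(placebo) / n_per_group - sum(drug) / n_per_group


def share_misleading(n_studies: int = 10_000, n_per_group: int = 10,
                     threshold: float = 1.0, seed: int = 0) -> float:
    """Fraction of simulated studies in which a useless drug appears to reduce
    pain by at least `threshold` points."""
    rng = random.Random(seed)
    hits = sum(one_null_study(rng, n_per_group) >= threshold for _ in range(n_studies))
    return hits / n_studies


print(share_misleading())
```

With these assumptions, a noticeable share of small studies make the useless drug look effective, and raising `n_per_group` shrinks that share. Either way, any single study remains an educated guess that later studies can confirm or overturn.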

This, however, doesn't mean that scientific research studies are pointless and "mindless guesses." These studies use the strict processes of the scientific method, which help to minimize (but not fully remove) other possible explanations. Instead, it would be better to think of scientific conclusions as "educated guesses." They are essentially our best guess about the facts and the "truth," and they are always eligible to be altered, dismissed, or amended by future scientific research studies.

- Cameron, E. E., Nuzzo, J. B., & Bell, J. A. (2019). Global health security index: Building collective action and accountability. Baltimore: Johns Hopkins Bloomberg School of Public Health. https://www.ghsindex.org/wp-content/uploads/2021/11/2019-Global-Health-Security-Index.pdf

- Barry, J. M. (2020). The great influenza: The story of the deadliest pandemic in history. Penguin UK.

- Wason, P. C. (1968). Reasoning about a rule. Quarterly Journal of Experimental Psychology, 20(3), 273-281.

- Wason, P. C. (1977). Self-contradictions. In P. N. Johnson-Laird & P. C. Wason (Eds.), Thinking: Readings in cognitive science. Cambridge: Cambridge University Press.

- Evans, J. St. B. T., Newstead, S. E., & Byrne, R. M. J. (1993). Human reasoning: The psychology of deduction. Psychology Press.

Inferential statistics: Statistical processes that help researchers explore a research question by using probability to infer generalizations about the population from the data and results of a sample.

Scientific method: An empirical method, or strategy, to discover facts about the world, emphasizing careful observation, making and testing hypotheses, and sharing results within a scientific community. It employs a strict skepticism to minimize errors due to common cognitive biases.

Confirmation bias: A cognitive bias that leads people to focus only on trying to confirm their prior beliefs.

Falsifiability: The ability to disprove, or falsify, a statement, hypothesis, or theory.
