Psychologists striving to understand the human mind may study the nervous system. Learning how the body’s cells and organs function can help us understand the biological basis of human psychology. The nervous system is composed of two basic cell types: glial cells (also known as glia) and neurons. Glial cells are traditionally thought to play a supportive role to neurons, both physically and metabolically. Glial cells provide scaffolding on which the nervous system is built, help neurons line up closely with each other to allow neuronal communication, provide insulation to neurons, transport nutrients and waste products, and mediate immune responses. For years, researchers believed that there were many more glial cells than neurons. However, more recent work from Suzana Herculano-Houzel’s laboratory has called this long-standing assumption into question and has provided important evidence that there may be a nearly 1:1 ratio of glial cells to neurons. This is important because it suggests that human brains are more similar to other primate brains than previously thought (Azevedo et al., 2009; Herculano-Houzel, 2012; Herculano-Houzel, 2009). Neurons, on the other hand, serve as interconnected information processors that are essential for all of the tasks of the nervous system. This section briefly describes the structure and function of neurons.

Imagine trying to string words together into a meaningful sentence without knowing the meaning of each word or its function (i.e., whether it is a verb, a noun, or an adjective). In a similar fashion, to appreciate how groups of cells work together in a meaningful way in the brain as a whole, we must first understand how individual cells in the brain function. Much like words, brain cells, called neurons, have an underlying structure that provides the foundation for their functional purpose. Have you ever seen a neuron? Did you know that the basic structure of a neuron is similar whether it is from the brain of a rat or a human? How do the billions of neurons in our brain allow us to do all the fun things we enjoy, such as texting a friend, cheering on our favorite sports team, or laughing?

Neuron Structure

Neurons are the central building blocks of the nervous system, 100 billion strong at birth. Like all cells, neurons consist of several different parts, each serving a specialized function (Figure 3.8). A neuron’s outer surface is made up of a semipermeable membrane. This membrane allows smaller molecules and molecules without an electrical charge to pass through it while stopping larger or highly charged molecules.

The nucleus of the neuron is located in the soma or cell body. The soma has branching extensions known as dendrites. The neuron is a small information processor, and dendrites serve as input sites where signals are received from other neurons. These signals are transmitted electrically across the soma and down a major extension from the soma known as the axon, which ends at multiple terminal buttons. The terminal buttons contain synaptic vesicles that house neurotransmitters, the chemical messengers of the nervous system.

Axons range in length from a fraction of an inch to several feet. In some axons, glial cells form a fatty substance known as the myelin sheath, which coats the axon and acts as an insulator, increasing the speed at which the signal travels. The myelin sheath is not continuous; small gaps occur along the length of the axon. These gaps in the myelin sheath are known as the nodes of Ranvier. The myelin sheath is crucial for the normal operation of the neurons within the nervous system: the loss of the insulation it provides can be detrimental to normal function. To understand how this works, let’s consider an example. PKU, a genetic disorder discussed earlier, causes a reduction in myelin and abnormalities in the white matter of cortical and subcortical structures. The disorder is associated with a variety of issues including severe cognitive deficits, exaggerated reflexes, and seizures (Anderson & Leuzzi, 2010; Huttenlocher, 2000). Another disorder, multiple sclerosis (MS), is an autoimmune disorder that involves a large-scale loss of the myelin sheath on axons throughout the nervous system. The resulting interference in the electrical signal prevents the quick transmission of information by neurons and can lead to a number of symptoms, such as dizziness, fatigue, loss of motor control, and sexual dysfunction. While some treatments may help to modify the course of the disease and manage certain symptoms, there is currently no known cure for multiple sclerosis.

In healthy individuals, the neuronal signal moves rapidly down the axon to the terminal buttons, where synaptic vesicles release neurotransmitters into the synaptic cleft (Figure 3.9). The synaptic cleft is a very small space between two neurons and is an important site where communication between neurons occurs. Once neurotransmitters are released into the synaptic cleft, they travel across it and bind with corresponding receptors on the dendrite of an adjacent neuron. Receptors, proteins on the cell surface where neurotransmitters attach, vary in shape, with different shapes “matching” different neurotransmitters.

How does a neurotransmitter “know” which receptor to bind to? The neurotransmitter and the receptor have what is referred to as a lock-and-key relationship. Specific neurotransmitters fit specific receptors similar to how a key fits a lock. The neurotransmitter binds to any receptor that it fits.

The action potential, the electrical signal that travels down the axon, is an all-or-none phenomenon. In simple terms, this means that an incoming signal from another neuron is either sufficient or insufficient to reach the threshold of excitation. There is no in-between, and there is no turning off an action potential once it starts. Think of it as sending an email or a text message: you can think about sending it all you want, but the message is not sent until you hit the send button. Furthermore, once you send the message, there is no stopping it.

Because it is all or none, the action potential is recreated, or propagated, at its full strength at every point along the axon. Much like the lit fuse of a firecracker, it does not fade away as it travels down the axon. It is this all-or-none property that explains the fact that your brain perceives an injury to a distant body part like your toe as equally painful as one to your nose.
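The all-or-none idea can be made concrete with a deliberately simplified toy model. It is not a physiological simulation. The voltage values are typical textbook figures that this section does not state, so treat them as assumptions. The function names are hypothetical and exist only for illustration.

```python
# Toy all-or-none model of an action potential.
# The voltages are assumed typical values, not figures given in this section.
RESTING_MV = -70.0    # assumed resting membrane potential
THRESHOLD_MV = -55.0  # assumed threshold of excitation
PEAK_MV = 40.0        # assumed peak voltage of an action potential


def fires(depolarization_mv: float) -> bool:
    """Return True if an incoming signal pushes the membrane to threshold."""
    return RESTING_MV + depolarization_mv >= THRESHOLD_MV


def peaks_along_axon(depolarization_mv: float, n_segments: int = 5) -> list[float]:
    """Peak voltage reached at each segment of a hypothetical axon.

    Either the signal reaches threshold and the spike is regenerated at full
    strength in every segment, or it does not and no spike occurs at all.
    """
    if not fires(depolarization_mv):
        return [RESTING_MV] * n_segments  # below threshold: no action potential
    return [PEAK_MV] * n_segments         # at/above threshold: same size everywhere


if __name__ == "__main__":
    for stimulus in (10.0, 15.0, 40.0):
        print(stimulus, peaks_along_axon(stimulus))
```

A 40 mV input yields exactly the same spike as a 15 mV input that only just reaches threshold, and the spike does not shrink from one segment to the next. A 10 mV input produces nothing at all. The sketch captures only this single-spike, all-or-none behavior and ignores everything else about how real neurons signal.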

As noted earlier, when the action potential arrives at the terminal button, the synaptic vesicles release their neurotransmitters into the synaptic cleft. The neurotransmitters travel across the synapse and bind to receptors on the dendrites of the adjacent neuron, and the process repeats itself in the new neuron (assuming the signal is sufficiently strong to trigger an action potential). Once the signal is delivered, excess neurotransmitters in the synaptic cleft drift away, are broken down into inactive fragments, or are reabsorbed in a process known as reuptake. Reuptake involves the neurotransmitter being pumped back into the neuron that released it, in order to clear the synapse (Figure 3.12). Clearing the synapse serves both to provide a clear “on” and “off” state between signals and to regulate the production of neurotransmitters (full synaptic vesicles provide signals that no additional neurotransmitters need to be produced).
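To show why reuptake matters for how long a neurotransmitter stays active, here is a toy sketch. It assumes the cleft concentration decays exponentially (first-order clearance), with reuptake and the other clearance routes (drifting away, breakdown) each contributing a rate. That assumption, the numbers, and the function name are all hypothetical and chosen only for illustration.

```python
import math

# Toy first-order clearance model of a neurotransmitter in the synaptic cleft.
# Rates and concentrations are hypothetical, arbitrary units.


def time_in_cleft(initial: float, floor: float,
                  reuptake_rate: float, other_clearance_rate: float) -> float:
    """Time for the cleft concentration to fall from `initial` to `floor`.

    Assumes concentration(t) = initial * exp(-k * t), where k is the sum of
    the reuptake rate and all other clearance (diffusion, breakdown).
    """
    k = reuptake_rate + other_clearance_rate
    return math.log(initial / floor) / k


if __name__ == "__main__":
    normal = time_in_cleft(1.0, 0.1, reuptake_rate=0.9, other_clearance_rate=0.1)
    reduced = time_in_cleft(1.0, 0.1, reuptake_rate=0.3, other_clearance_rate=0.1)
    print(f"normal reuptake:  {normal:.2f} time units")
    print(f"reduced reuptake: {reduced:.2f} time units")
```

With these made-up rates, cutting reuptake from 0.9 to 0.3 lowers the total clearance rate from 1.0 to 0.4. The neurotransmitter therefore lingers 2.5 times longer. This is the same qualitative effect that reuptake inhibitors aim for, though real synapses are far more complex than a single exponential.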

Neurotransmitters and Drugs

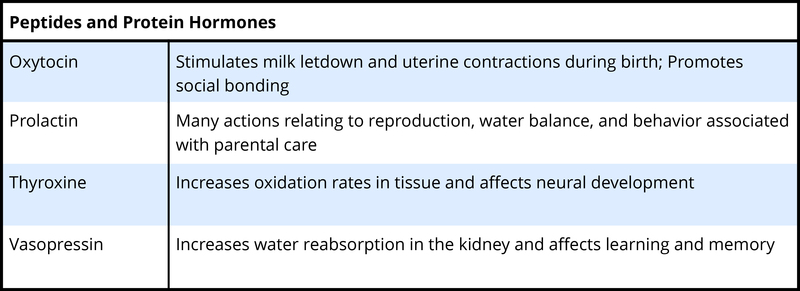

There are several different types of neurotransmitters released by different neurons, and we can speak in broad terms about the kinds of functions associated with different neurotransmitters (Table 3.1). Much of what psychologists know about the functions of neurotransmitters comes from research on the effects of drugs in psychological disorders. Psychologists who take a biological perspective and focus on the physiological causes of behavior assert that psychological disorders like depression and schizophrenia are associated with imbalances in one or more neurotransmitter systems. In this perspective, psychotropic medications can help improve the symptoms associated with these disorders. Psychotropic medications are drugs that treat psychiatric symptoms by restoring neurotransmitter balance.

Major Neurotransmitters and How They Affect Behavior

| Neurotransmitter | Involved in | Potential Effect on Behavior |
|---|---|---|
| Acetylcholine | Muscle action, memory | Increased arousal, enhanced cognition |
| Beta-endorphin | Pain, pleasure | Decreased anxiety, decreased tension |
| Dopamine | Mood, sleep, learning | Increased pleasure, suppressed appetite |
| Gamma-aminobutyric acid (GABA) | Brain function, sleep | Decreased anxiety, decreased tension |
| Glutamate | Memory, learning | Increased learning, enhanced memory |
| Norepinephrine | Heart, intestines, alertness | Increased arousal, suppressed appetite |
| Serotonin | Mood, sleep | Modulated mood, suppressed appetite |

Psychoactive drugs can act as agonists or antagonists for a given neurotransmitter system. Agonists are chemicals that mimic a neurotransmitter at the receptor site. An antagonist, on the other hand, blocks or impedes the normal activity of a neurotransmitter at the receptor. Agonists and antagonists include drugs that are prescribed to correct the specific neurotransmitter imbalances underlying a person’s condition. For example, Parkinson’s disease, a progressive nervous system disorder, is associated with low levels of dopamine. Therefore, a common treatment strategy for Parkinson’s disease involves using dopamine agonists, which mimic the effects of dopamine by binding to dopamine receptors.

Certain symptoms of schizophrenia are associated with overactive dopamine neurotransmission. The antipsychotics used to treat these symptoms are antagonists for dopamine—they block dopamine’s effects by binding its receptors without activating them. Thus, they prevent dopamine released by one neuron from signaling information to adjacent neurons.

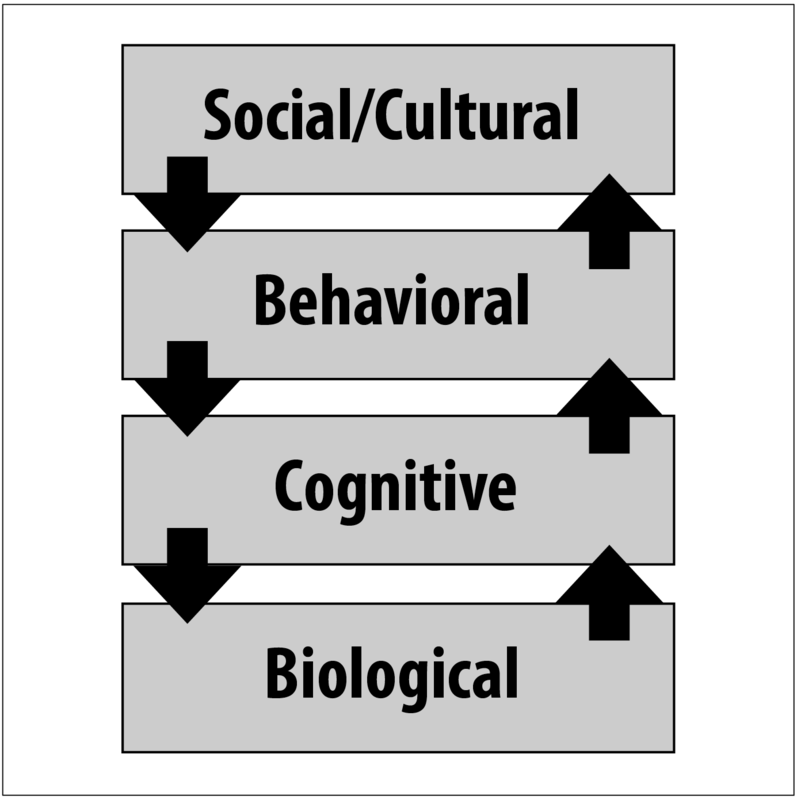

In contrast to agonists and antagonists, which both operate by binding to receptor sites, reuptake inhibitors prevent unused neurotransmitters from being transported back to the neuron. This allows neurotransmitters to remain active in the synaptic cleft for longer durations, increasing their effectiveness. Depression, which has been consistently linked with reduced serotonin levels, is commonly treated with selective serotonin reuptake inhibitors (SSRIs). By preventing reuptake, SSRIs strengthen the effect of serotonin, giving it more time to interact with serotonin receptors on dendrites. Common SSRIs on the market today include Prozac, Paxil, and Zoloft. The drug LSD is structurally very similar to serotonin, and it affects the same neurons and receptors as serotonin.

Psychotropic drugs are not instant solutions for people suffering from psychological disorders. Often, an individual must take a drug for several weeks before seeing improvement, and many psychoactive drugs have significant negative side effects. Furthermore, individuals vary dramatically in how they respond to the drugs. To improve chances for success, it is not uncommon for people receiving pharmacotherapy to undergo psychological and/or behavioral therapies as well. Some research suggests that combining drug therapy with other forms of therapy tends to be more effective than any one treatment alone (for one such example, see March et al., 2007).

Learning Objectives

By the end of this section, you will be able to:

- Describe the difference between the central and peripheral nervous systems

- Explain the difference between the somatic and autonomic nervous systems

- Differentiate between the sympathetic and parasympathetic divisions of the autonomic nervous system

- Distinguish between gray and white matter of the cerebral hemispheres.

The mammalian nervous system is a complex biological organ that enables many animals, including humans, to function in a coordinated fashion. The original design of this system is preserved across many animals through evolution; thus, adaptive physiological and behavioral functions are similar across many animal species. Comparative study of physiological functioning in the nervous systems of different animals lends insights into their behavior and their mental processing and makes it easier for us to understand the human brain and behavior. In addition, studying the development of the nervous system in a growing human provides a wealth of information about the change in its form and the behaviors that result from this change. The nervous system is divided into central and peripheral nervous systems, and the two heavily interact with one another. The peripheral nervous system controls volitional (somatic nervous system) and nonvolitional (autonomic nervous system) behaviors using cranial and spinal nerves. The central nervous system is divided into forebrain, midbrain, and hindbrain, and each division performs a variety of tasks. For example, the cerebral cortex in the forebrain houses sensory, motor, and associative areas that gather sensory information, process information for perception and memory, and produce responses based on incoming and inherent information. To study the nervous system, a number of methods have evolved over time; these methods include examining brain lesions, microscopy, electrophysiology, electroencephalography, and many scanning technologies.

Evolution of the Nervous System

Many scientists and thinkers (Cajal, 1937; Crick & Koch, 1990; Edelman, 2004) believe that the human nervous system is the most complex machine known to man. Its complexity points to one undeniable fact: it has evolved slowly over time from simpler forms. The evolution of the nervous system is intriguing not simply because we can marvel at this complicated biological structure. It is fascinating because the system carries the lineage of a long history of many less complex nervous systems (Figure 1), and it documents a record of adaptive behaviors observed in life forms other than humans. Thus, the evolutionary study of the nervous system is important; it is the first step in understanding the system's design, its workings, and its functional interface with the environment.

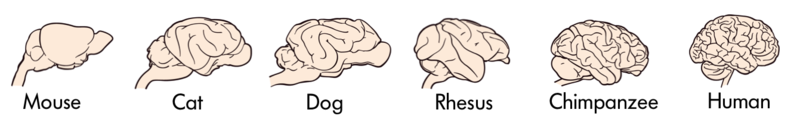

The brains of some animals, like apes, monkeys, and rodents, are structurally similar to those of humans (Figure 1), while others are not (e.g., invertebrates, single-celled organisms). Does the anatomical similarity of these brains suggest that the behaviors that emerge in these species are also similar? Indeed, many animals display behaviors that are similar to those of humans. For example, apes use nonverbal communication signals with their hands and arms that resemble nonverbal forms of communication in humans (Gardner & Gardner, 1969; Goodall, 1986; Knapp & Hall, 2009). If we study very simple behaviors, like physiological responses made by individual neurons, then the brain-based behaviors of invertebrates (Kandel & Schwartz, 1982) look very similar to those of humans. This suggests that from time immemorial such basic behaviors have been conserved in the brains of many simple animal forms and are in fact the foundation of more complex behaviors in animals that evolved later (Bullock, 1984).

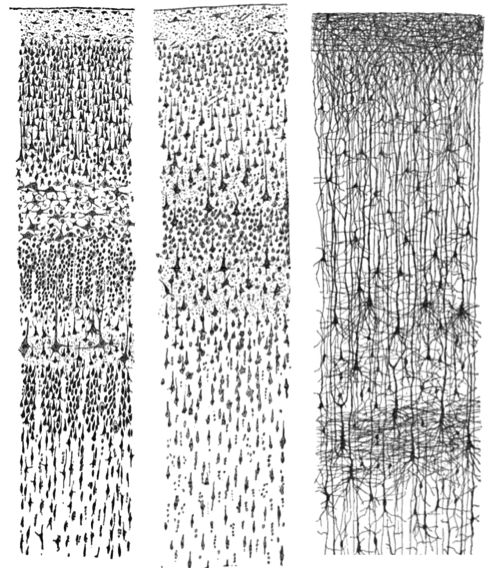

Even at the micro-anatomical level, individual neurons differ in complexity across animal species. Human neurons are more intricate than those of other animals; for example, neuronal processes (dendrites) in humans have many more branch points, branches, and spines.

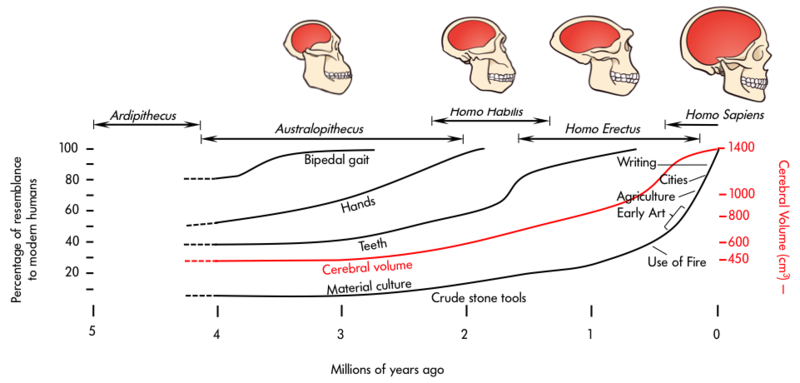

Complexity in the structure of the nervous system, at both the macro- and micro-levels, gives rise to complex behaviors. We can observe similar movements of the limbs, as in nonverbal communication, in apes and humans. However, the variety and intricacy of nonverbal hand behaviors in humans surpass those of apes. Deaf individuals who use American Sign Language (ASL) express themselves fully without speech; they use this language with such fine gradation that many accents of ASL exist (Walker, 1987). In the genus Homo, behavior becomes more complex as the nervous system, especially the cerebral cortex, becomes more complex (Figure 2). We can compare the sophistication of material culture in Homo habilis (2 million years ago; brain volume ~650 cm³) and Homo sapiens (300,000 years ago to the present; brain volume ~1400 cm³). Homo habilis used crude stone tools, whereas Homo sapiens uses modern tools to erect cities, develop written languages, embark on space travel, and study itself. All of this is due to the increasing complexity of the nervous system.

What has led to the complexity of the brain and nervous system through evolution, and to its behavioral and cognitive refinement? Darwin (1859, 1871) proposed two forces, natural selection and sexual selection, as the engines behind this change. He prophesied that “psychology will be based on a new foundation, that of the necessary acquirement of each mental power and capacity by gradation,” that is, psychology will be based on evolution (Rosenzweig, Breedlove, & Leiman, 2002).

Development of the Nervous System

While the study of change in the nervous system over eons is immensely captivating, studying the change in a single brain during individual development is no less engaging. In many ways, the ontogeny (development) of the nervous system in an individual mimics the evolutionary advancement of this structure observed across many animal species. During development, the nervous tissue emerges from the ectoderm (one of the three layers of the mammalian embryo) through the process of neural induction. This process causes the formation of the neural tube, which extends in a rostrocaudal (head-to-tail) plane. The hollow tube then seals itself closed along the rostrocaudal direction. In some disease conditions, the neural tube does not close caudally, resulting in an abnormality called spina bifida. In this pathological condition, the lumbar and sacral segments of the spinal cord are disrupted.

As gestation progresses, the neural tube balloons up (cephalization) at the rostral end. By about day 40, the forebrain, midbrain, hindbrain, and spinal cord can be visually delineated. About 50 days into gestation, six cephalic areas can be anatomically discerned (see below for a more detailed description of these areas).

The progenitor cells (neuroblasts) that form the lining (neuroepithelium) of the neural tube generate all the neurons and glial cells of the central nervous system. During early stages of this development, neuroblasts rapidly divide and specialize into many varieties of neurons and glial cells, but this proliferation of cells is not uniform along the neural tube—that is why we see the forebrain and hindbrain expand into larger cephalic tissues than the midbrain. The neuroepithelium also generates a group of specialized cells that migrate outside the neural tube to form the neural crest. This structure gives rise to sensory and autonomic neurons in the peripheral nervous system.

The Structure of the Nervous System

The mammalian nervous system is divided into central and peripheral nervous systems.

The Peripheral Nervous System

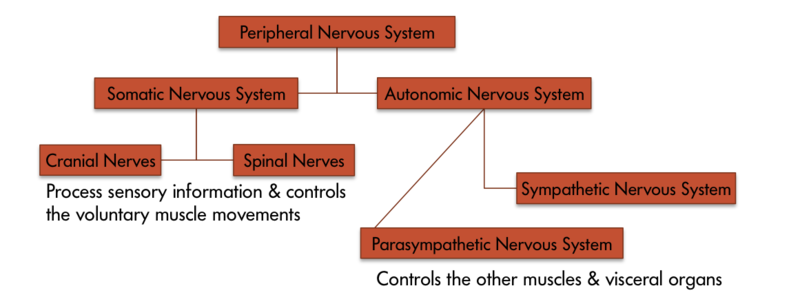

The peripheral nervous system is divided into somatic and autonomic nervous systems (Figure 3). The somatic nervous system consists of cranial nerves (12 pairs) and spinal nerves (31 pairs) and is under the individual's volitional control in moving the body's muscles. The autonomic nervous system, which also runs through these nerves, governs muscles and glands over which the individual has little voluntary control. The main divisions of the autonomic nervous system that control visceral structures are the sympathetic and parasympathetic nervous systems.

At an appropriate cue (say, a fear-inducing object like a snake), the sympathetic division generally energizes many muscles (e.g., heart) and glands (e.g., adrenals). This produces activity and the release of hormones that prepare the individual to deal with the snake through fight-or-flight responses. Whether the individual decides to fight the snake or run away from it, either action requires energy; in short, the sympathetic nervous system says “go, go, go.” The parasympathetic nervous system, on the other hand, curtails undue energy mobilization into muscles and glands and modulates the response by saying “stop, stop, stop.” This push–pull tandem system regulates fight-or-flight responses in all of us.

While it is clear that such a response would be critical for survival for our ancestors, who lived in a world full of real physical threats, many of the high-arousal situations we face in the modern world are more psychological in nature. For example, think about how you feel when you have to stand up and give a presentation in front of a roomful of people, or right before taking a big test. You are in no real physical danger in those situations, and yet you have evolved to respond to a perceived threat with the fight-or-flight response. This kind of response is not nearly as adaptive in the modern world. In fact, we suffer negative health consequences when faced constantly with psychological threats that we can neither fight nor flee. Recent research suggests that persistent and repeated exposure to stressful situations has many negative consequences, including increased susceptibility to heart disease (Chandola, Brunner, & Marmot, 2006) and impaired function of the immune system (Glaser & Kiecolt-Glaser, 2005). Some of this tendency for stress reactivity can be wired by early experiences of trauma.

Once the threat has been resolved, the parasympathetic nervous system takes over and returns bodily functions to a relaxed state. Heart rate and blood pressure return to normal, the pupils constrict, bladder control is regained, and the liver begins to store glucose in the form of glycogen for future use. These restorative processes are associated with the activation of the parasympathetic nervous system.

The Central Nervous System

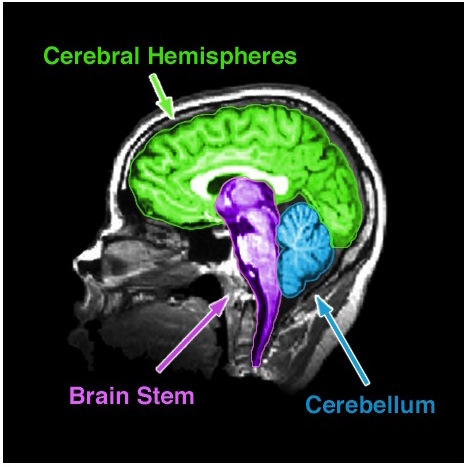

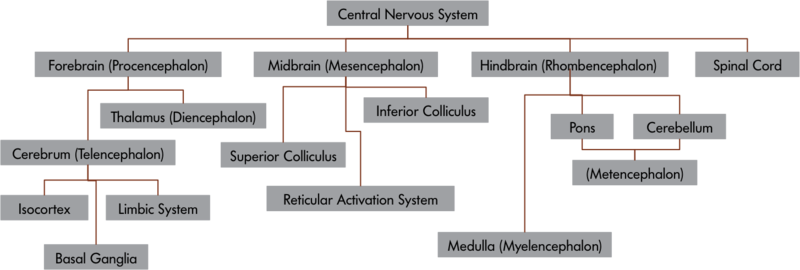

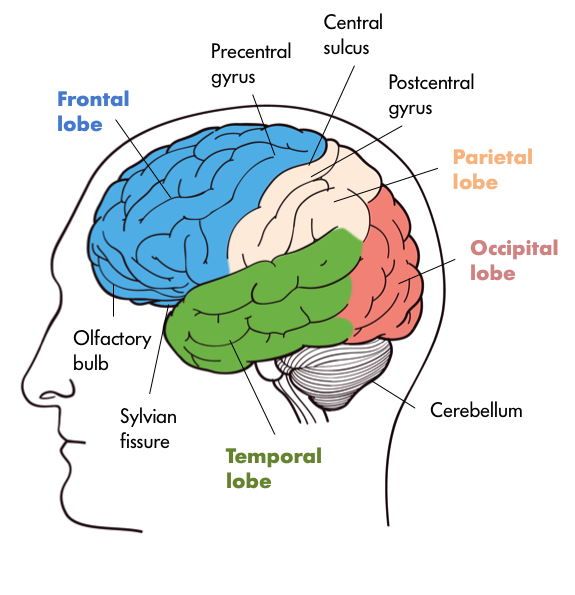

The central nervous system is divided into a number of important parts, including the spinal cord (see Figure 4). Each part is specialized to perform a set of specific functions. The telencephalon, or cerebrum, is a relatively recent development in the evolution of the mammalian nervous system. In humans, it is about the size of a large napkin, and when crumpled into the skull, it forms furrows called sulci (singular form, sulcus). The bulges between sulci are called gyri (singular form, gyrus). The cortex is divided into two hemispheres, and each hemisphere is further divided into four lobes (Figure 5a), which have specific functions. The division of these lobes is based on two delineating sulci. The central sulcus divides the hemisphere into frontal and parietal-occipital lobes, and the lateral sulcus marks the temporal lobe, which lies below.

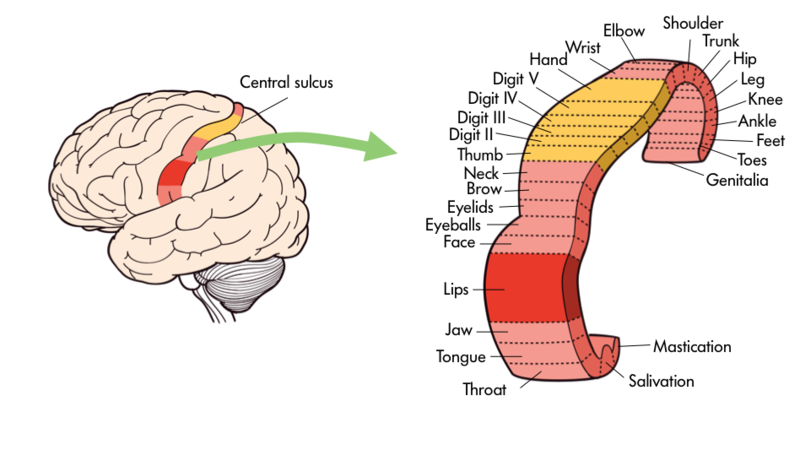

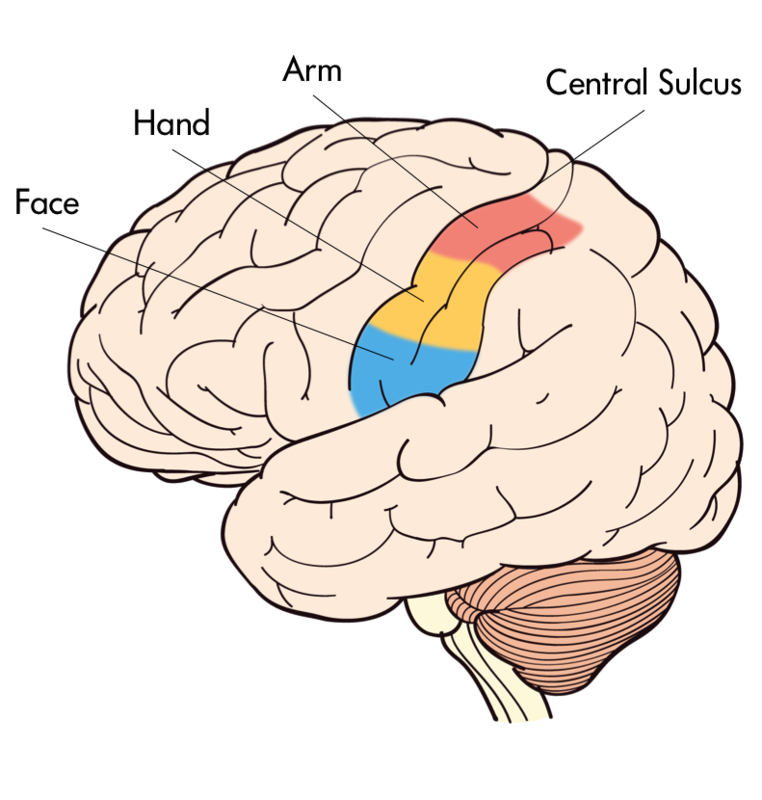

Just in front of the central sulcus lies an area called the primary motor cortex (precentral gyrus), which connects to the muscles of the body, and on volitional command moves them. From mastication to movements in the genitalia, the body map is represented on this strip (Figure 5b).

Some body parts, like fingers, thumbs, and lips, occupy a greater representation on the strip than, say, the trunk. This disproportionate representation of the body on the primary motor cortex is called the magnification factor (Rolls & Cowey, 1970) and is seen in other motor and sensory areas. At the lower end of the central sulcus, close to the lateral sulcus, lies Broca’s area (Figure 6b) in the left frontal lobe, which is involved with language production. In 1861, damage to this part of the brain led the French physician Pierre Paul Broca to document many different forms of aphasia. His patients would lose the ability to speak or would retain partial speech impoverished in syntax and grammar (AAAS, 1880). It is no wonder that others have found subvocal rehearsal and central executive processes of working memory in this frontal region (Smith & Jonides, 1997, 1999).

Just behind the central sulcus, in the parietal lobe, lies the primary somatosensory cortex (Figure 6a) on the postcentral gyrus. It represents the whole body and receives inputs from the skin and muscles. The primary somatosensory cortex parallels, abuts, and connects heavily to the primary motor cortex and resembles it in terms of areas devoted to bodily representation. All spinal and some cranial nerves (e.g., the facial nerve) send sensory signals from skin (e.g., touch) and muscles to the primary somatosensory cortex. Close to the lower (ventral) end of this strip, curved inside the parietal lobe, is the taste area (secondary somatosensory cortex), which is involved with taste experiences that originate from the tongue, pharynx, epiglottis, and so forth.

Just below the parietal lobe, and under the caudal end of the lateral fissure, in the temporal lobe, lies Wernicke’s area (Demonet et al., 1992). This area is involved with language comprehension and is connected to Broca’s area through the arcuate fasciculus, a bundle of nerve fibers that connects these two regions. Damage to Wernicke’s area (Figure 6b) results in many kinds of agnosias; agnosia is defined as an inability to know or understand language and speech-related behaviors. So an individual may show word deafness, which is an inability to recognize spoken language, or word blindness, which is an inability to recognize written or printed language. Close to Wernicke’s area is the primary auditory cortex, which is involved with audition. Finally, the brain region devoted to smell (olfaction) is tucked away inside the primary olfactory cortex (prepyriform cortex).

At the very back of the cerebral cortex lies the occipital lobe, housing the primary visual cortex. Visual signals travel along the optic nerves to the thalamus (lateral geniculate nucleus, LGN) and then to the visual cortex, where images that are received on the retina are projected (Hubel, 1995).

In the past 50 to 60 years, the visual sense and visual pathways have been studied extensively, and our understanding of them has increased manifold. We now understand that the images objects form on the retina are transformed (transduction) into neural signals that are handed on to the visual cortex for further processing. In the visual cortex, the attributes (features) of the image, such as color, texture, and orientation, are decomposed and processed by different visual cortical modules (Van Essen, Anderson & Felleman, 1992). They are then recombined to give rise to a unified perception of the image in question.

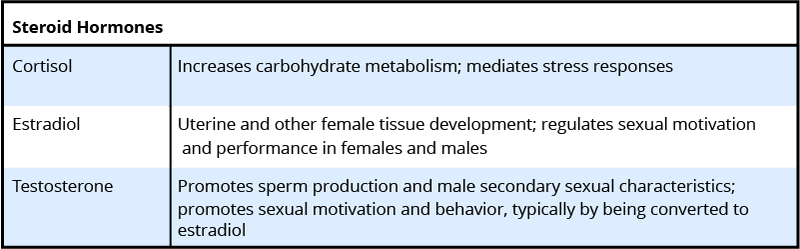

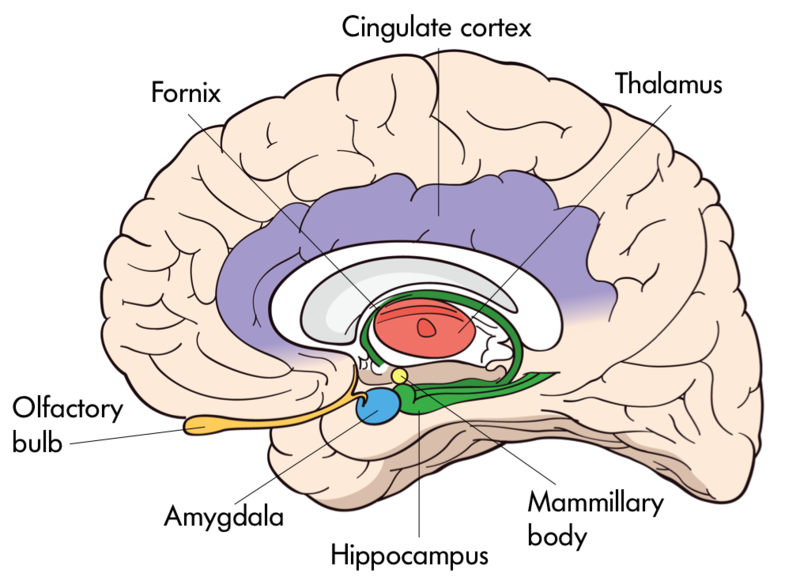

If we cut the cerebral hemispheres in the middle, a new set of structures comes into view. Many of these perform different functions vital to our being. For example, the limbic system contains a number of nuclei that process memory (hippocampus and fornix) and attention and emotions (cingulate gyrus). The globus pallidus is involved with motor movements and their coordination. The hypothalamus and thalamus are involved with drives, motivations, and the relaying of sensory and motor signals. The hypothalamus plays a key role in regulating endocrine hormones in conjunction with the pituitary gland, which extends from the hypothalamus through a stalk (infundibulum).

Descending below the thalamus, we come to the midbrain. It contains the superior and inferior colliculi, which process visual and auditory information. It also contains the substantia nigra, which is implicated in Parkinson’s disease, and the reticular formation, which regulates arousal, sleep, and temperature. A little lower lies the hindbrain. Its pons processes sensory and motor information through the cranial nerves and works as a bridge connecting the cerebral cortex with the medulla, transferring information back and forth between the brain and the spinal cord. The medulla oblongata processes breathing, digestion, heart and blood vessel function, swallowing, and sneezing. The cerebellum controls motor movement coordination, balance, equilibrium, and muscle tone.

The midbrain and the hindbrain, which make up the brain stem, culminate in the spinal cord. In the cerebral cortex, the gray matter (neuronal cell bodies) lies outside and the white matter (myelinated axons) inside. In the spinal cord this arrangement is reversed: the gray matter resides inside and the white matter outside. Paired nerve roots exit the spinal cord, some toward the back (dorsal) and others toward the front (ventral). The dorsal roots (afferent) carry sensory information from the skin and muscles. The ventral roots (efferent) send signals to muscles and organs to respond.

Gray Versus White Matter

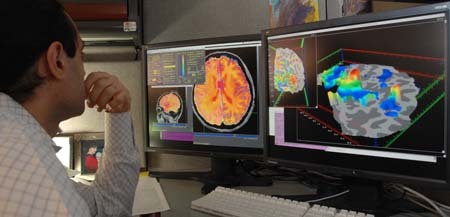

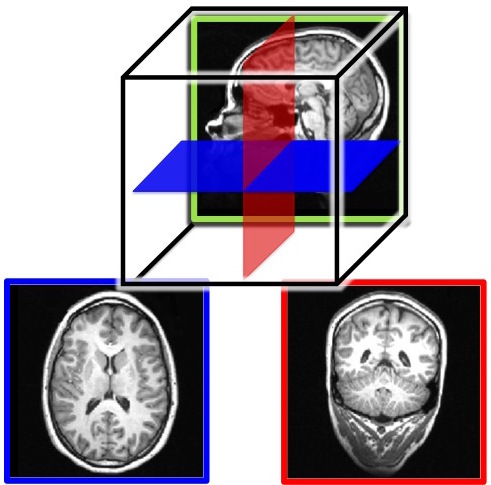

The cerebral hemispheres contain both gray and white matter, so called because they appear grayish and whitish in dissections or in an MRI (magnetic resonance imaging; see the module “Studying the Human Brain”). The gray matter is composed of the neuronal cell bodies (see the module “Neurons”). The cell bodies (or soma) contain the genes of the cell and are responsible for metabolism (keeping the cell alive) and synthesizing proteins. In this way, the cell body is the workhorse of the cell. The white matter is composed of the axons of the neurons, and, in particular, axons that are covered with a sheath of myelin (fatty support cells that are whitish in color). Axons conduct the electrical signals from the cell and are, therefore, critical to cell communication. People use the expression “use your gray matter” when they want a person to think harder. The “gray matter” in this expression is probably a reference to the cerebral hemispheres more generally, because the gray cortical sheet (the convoluted surface of the cortex) is their most visible part. However, both the gray matter and white matter are critical to proper functioning of the mind. Losses of either result in deficits in language, memory, reasoning, and other mental functions. See Figure 3 for MRI slices showing both the outer gray cortical sheet and the inner white matter that connects its cell bodies.

Figure 3. MRI slices of the human brain. Both the outer gray matter and inner white matter are visible in each image. The brain is a three-dimensional (3-D) structure, but an image is two-dimensional (2-D). Here, we show example slices of the three possible 2-D cuts through the brain: a sagittal slice (top image), a horizontal slice (bottom left), which is also known as a transverse or axial slice, and a coronal slice (bottom right). The bottom two images are color-coded to match the illustration of the relative orientations of the three slices in the top image.

Studying the Nervous System

The study of the nervous system involves anatomical and physiological techniques that have improved over the years in efficiency and caliber. The gross morphology of the nervous system can be studied with an eye-level view of the brain and the spinal cord. However, to resolve minute components, optical and electron microscopic techniques are needed.

Light microscopes and, later, electron microscopes have changed our understanding of the intricate connections that exist among nerve cells. For example, modern staining procedures (immunocytochemistry) make it possible to see selected neurons that are of one type or another or are affected by growth. With the higher resolution of electron microscopes, fine structures like the synaptic cleft between the pre- and post-synaptic neurons can be studied in detail. Along with the neuroanatomical techniques, a number of other methodologies aid neuroscientists in studying the function and physiology of the nervous system. These methods will be explored later in the chapter.

Understanding the nervous system has been a long journey of inquiry, spanning several hundreds of years of meticulous studies carried out by some of the most creative and versatile investigators in the fields of philosophy, evolution, biology, physiology, anatomy, neurology, neuroscience, cognitive sciences, and psychology. Despite our profound understanding of this organ, its mysteries continue to surprise us, and its intricacies make us marvel at this complex structure unmatched in the universe.

Learning Objectives

By the end of this section, you will be able to:

- Explain the functions of the spinal cord

- Identify the hemispheres of the brain

- Name and describe the basic function of the four cerebral lobes: occipital, temporal, parietal, and frontal cortex.

- Describe a split-brain patient and at least two important aspects of brain function that these patients reveal.

The brain is a remarkably complex organ composed of billions of interconnected neurons and glia. It is a bilateral, or two-sided, structure that can be separated into distinct lobes. Each lobe is associated with certain types of functions, but, ultimately, all of the areas of the brain interact with one another to provide the foundation for our thoughts and behaviors. In this section, we discuss the overall organization of the brain and the functions associated with different brain areas, beginning with what can be seen as an extension of the brain, the spinal cord.

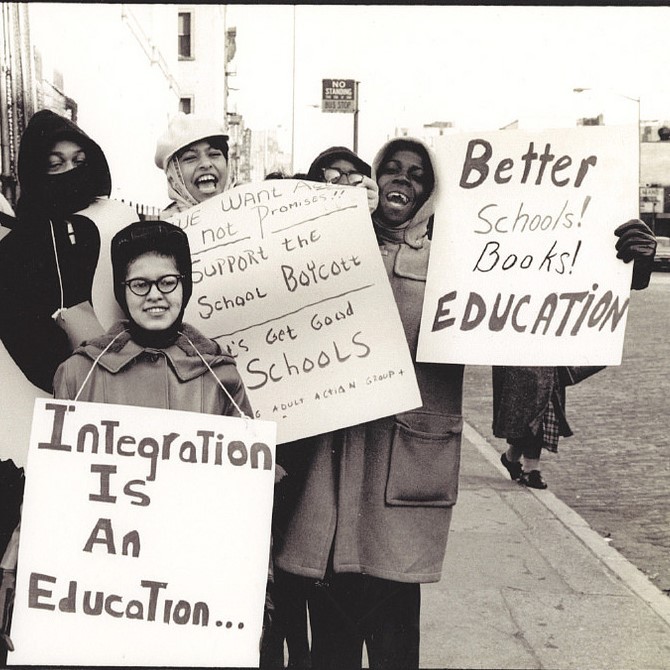

The earliest records of a psychological experiment go all the way back to the Pharaoh Psamtik I of Egypt in the 7th Century B.C. [Image: Neithsabes, CC0 Public Domain, https://goo.gl/m25gce]