
Learning Objectives

By the end of this section, you will be able to:

- Describe the basic anatomy and function of the auditory system

- Explain how we encode and perceive pitch

- Discuss how we localize sound

- Discuss Deaf culture and ableism

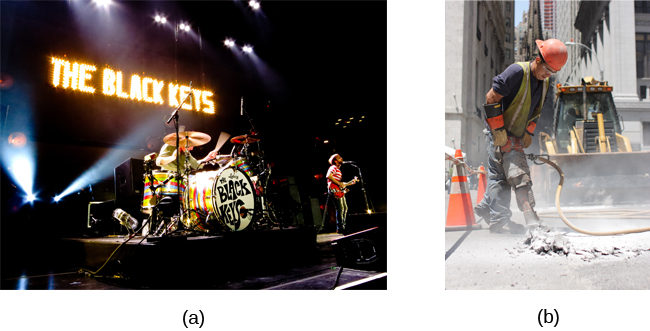

Our auditory system converts pressure waves into meaningful sounds. This translates into our ability to hear the sounds of nature, to appreciate the beauty of music, and to communicate with one another through spoken language. This section will provide an overview of the basic anatomy and function of the auditory system. It will include a discussion of how the sensory stimulus is translated into neural impulses, where in the brain that information is processed, how we perceive pitch, and how we know where sound is coming from. It will also discuss Deaf culture and the perceptions of hearing individuals about deafness.

Anatomy of the Auditory System

The ear can be separated into multiple sections. The outer ear includes the pinna, which is the visible part of the ear that protrudes from our heads, the auditory canal, and the tympanic membrane, or eardrum. The middle ear contains three tiny bones known as the ossicles, which are named the malleus (or hammer), incus (or anvil), and the stapes (or stirrup). The inner ear contains the semi-circular canals, which are involved in balance and movement (the vestibular sense), and the cochlea. The cochlea is a fluid-filled, snail-shaped structure that contains the sensory receptor cells (hair cells) of the auditory system.

Sound waves travel along the auditory canal and strike the tympanic membrane, causing it to vibrate. This vibration results in movement of the three ossicles. As the ossicles move, the stapes presses into a thin membrane of the cochlea known as the oval window. As the stapes presses into the oval window, the fluid inside the cochlea begins to move, which in turn stimulates hair cells, which are auditory receptor cells of the inner ear embedded in the basilar membrane. The basilar membrane is a thin strip of tissue within the cochlea.

The activation of hair cells is a mechanical process: the stimulation of the hair cell ultimately leads to activation of the cell. As hair cells become activated, they generate neural impulses that travel along the auditory nerve to the brain. Auditory information is shuttled to the inferior colliculus, the medial geniculate nucleus of the thalamus, and finally to the auditory cortex in the temporal lobe of the brain for processing. As in the visual system, there is also evidence suggesting that information about auditory recognition and localization is processed in parallel streams (Rauschecker & Tian, 2000; Renier et al., 2009).

Pitch Perception

Different frequencies of sound waves are associated with differences in our perception of the pitch of those sounds. Low-frequency sounds are lower pitched, and high-frequency sounds are higher pitched. How does the auditory system differentiate among various pitches?

Several theories have been proposed to account for pitch perception. We’ll discuss two of them here: temporal theory and place theory. The temporal theory of pitch perception asserts that frequency is coded by the activity level of a sensory neuron. This would mean that a given hair cell would fire action potentials related to the frequency of the sound wave. While this is a very intuitive explanation, we detect such a broad range of frequencies (20–20,000 Hz) that the frequency of action potentials fired by hair cells cannot account for the entire range. Because of properties related to sodium channels on the neuronal membrane that are involved in action potentials, there is a point at which a cell cannot fire any faster (Shamma, 2001).

The place theory of pitch perception suggests that different portions of the basilar membrane are sensitive to sounds of different frequencies. More specifically, the base of the basilar membrane responds best to high frequencies and the tip of the basilar membrane responds best to low frequencies. Therefore, hair cells that are in the base portion would be labeled as high-pitch receptors, while those in the tip of the basilar membrane would be labeled as low-pitch receptors (Shamma, 2001).

In reality, both theories explain different aspects of pitch perception. At frequencies up to about 4000 Hz, it is clear that both the rate of action potentials and place contribute to our perception of pitch. However, much higher frequency sounds can only be encoded using place cues (Shamma, 2001).
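The division of labor between the two theories can be sketched in a few lines of Python. This is only an illustration: the 20–20,000 Hz range and the 4000 Hz figure come from the text above, but the function name `pitch_cues` is hypothetical, and treating "about 4000 Hz" as a sharp cutoff is a simplifying assumption rather than a physiological fact.

```python
# Illustrative sketch only. The numbers come from the text; the function
# name and the hard cutoff at 4000 Hz are simplifying assumptions.
AUDIBLE_RANGE_HZ = (20, 20_000)   # range of frequencies humans detect
RATE_CODE_LIMIT_HZ = 4_000        # "up to about 4000 Hz" in the text


def pitch_cues(frequency_hz: float) -> list[str]:
    """Return the cues assumed to be available for encoding a tone's pitch."""
    low, high = AUDIBLE_RANGE_HZ
    if not (low <= frequency_hz <= high):
        return []  # outside the audible range described in the text

    # Place on the basilar membrane is usable across the audible range.
    cues = ["place"]

    # Firing rate (temporal theory) only contributes at lower frequencies,
    # because a neuron cannot fire arbitrarily fast.
    if frequency_hz <= RATE_CODE_LIMIT_HZ:
        cues.insert(0, "rate")
    return cues


for tone_hz in (100, 4_000, 15_000, 30_000):
    print(tone_hz, pitch_cues(tone_hz))
```

In this sketch a 100 Hz tone is encoded by both cues, a 15,000 Hz tone by place alone, and a 30,000 Hz tone falls outside the audible range altogether.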


Sound Localization

The ability to locate sound in our environments is an important part of hearing. Localizing sound could be considered similar to the way that we perceive depth in our visual fields. Like the monocular and binocular cues that provided information about depth, the auditory system uses both monaural (one-eared) and binaural (two-eared) cues to localize sound.

Each pinna interacts with incoming sound waves differently, depending on the sound’s source relative to our bodies. This interaction provides a monaural cue that is helpful in locating sounds that occur above or below and in front or behind us. The sound waves received by your two ears from sounds that come from directly above, below, in front, or behind you would be identical; therefore, monaural cues are essential (Grothe, Pecka, & McAlpine, 2010).

Binaural cues, on the other hand, provide information on the location of a sound along a horizontal axis by relying on differences in patterns of vibration of the eardrum between our two ears. If a sound comes from an off-center location, it creates two types of binaural cues: interaural level differences and interaural timing differences. Interaural level difference refers to the fact that a sound coming from the right side of your body is more intense at your right ear than at your left ear because of the attenuation of the sound wave as it passes through your head. Interaural timing difference refers to the small difference in the time at which a given sound wave arrives at each ear. Certain brain areas monitor these differences to construct where along a horizontal axis a sound originates (Grothe et al., 2010).
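To get a feel for how small interaural timing differences are, the following Python sketch estimates them with a deliberately simple geometric model. Everything numeric here is an assumption introduced for illustration and does not come from the text: the speed of sound (about 343 m/s in air), the distance between the ears (roughly 0.18 m), and the idea that the extra path to the far ear is a straight line rather than a curve around the head. The function name `interaural_time_difference` is likewise hypothetical.

```python
import math

# Assumed values for illustration only; neither appears in the text.
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air
EAR_SEPARATION_M = 0.18     # rough distance between the two ears


def interaural_time_difference(azimuth_deg: float) -> float:
    """Estimate the interaural timing difference in seconds.

    azimuth_deg is the horizontal angle of a distant sound source:
    0 is straight ahead, +90 is directly to the right, -90 to the left.
    A positive result means the sound reaches the right ear first.
    The model ignores the head itself and uses a straight-line path.
    """
    extra_path_m = EAR_SEPARATION_M * math.sin(math.radians(azimuth_deg))
    return extra_path_m / SPEED_OF_SOUND_M_S


for angle in (0, 30, 90, -90):
    itd_us = interaural_time_difference(angle) * 1_000_000
    print(f"azimuth {angle:>4} deg: {itd_us:8.1f} microseconds")
```

Under these assumptions, the largest difference (a source directly to one side) is on the order of half a millisecond, and a source straight ahead produces no difference at all, which is consistent with the point above that monaural cues are needed for sounds directly in front of or behind us.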

Deaf and Hard of Hearing

About 15% of American adults (almost 40 million people) report some trouble hearing. Deafness is the partial or complete inability to hear. Some people are born deaf, which is known as congenital deafness. Many others begin to suffer from conductive hearing loss because of age, genetic predisposition, or environmental effects, including exposure to extreme noise, physical trauma or injury, certain illnesses, or damage due to toxins (such as those found in certain solvents and metals).

Given the mechanical nature by which the sound wave stimulus is transmitted from the eardrum through the ossicles to the oval window of the cochlea, some degree of difficulty is inevitable for most hearing individuals. With conductive hearing loss, hearing problems are associated with a lack of vibration of the eardrum and/or movement of the ossicles. These problems are often dealt with through devices like hearing aids that amplify incoming sound waves to make the vibration of the eardrum and movement of the ossicles more likely to occur.

When the hearing problem is associated with a failure to transmit neural signals from the cochlea to the brain, it is called sensorineural hearing loss. One disease that results in sensorineural hearing loss is Ménière’s disease. Although not well understood, Ménière’s disease results in a degeneration of inner ear structures that can lead to hearing loss, tinnitus (constant ringing or buzzing), vertigo (a sense of spinning), and an increase in pressure within the inner ear (Semaan & Megerian, 2011). This kind of loss cannot be treated with hearing aids, but some individuals might be candidates for a cochlear implant as a treatment option. Cochlear implants are electronic devices that consist of a microphone, a speech processor, and an electrode array. The device receives incoming sound information and directly stimulates the auditory nerve to transmit information to the brain.

Deaf Culture

You will notice that the word “deaf” is capitalized in a seemingly inconsistent way throughout the following text. This is by design and by request of the Deaf community. Lowercase “deaf” refers to someone’s ability to hear, which can fall anywhere on a spectrum from some hearing to no hearing, while “Deaf” with a capital “D” refers to someone who identifies as culturally Deaf. This person will often use a signed communication system and participate in the rich culture and history of deafness. A person can be “deaf” and not “Deaf” (e.g., a deaf child born to hearing parents who neither learns to sign nor participates in Deaf culture), and a person can be “Deaf” but not “deaf” (e.g., a hearing child of a Deaf adult who engages in Deaf culture by signing or being involved in the community). If this seems confusing, recognize that much of Deaf identity comes from a combination of self and community identification: how a person presents themselves, and how the community will accept and identify them.

Suppose you learned a sign language like American Sign Language (ASL) in high school. In that case, you can participate in conversation with the Deaf community, and you may even be aware of cultural traditions and practices. However, you are not considered a part of the Deaf community. Even sign language interpreters (people who bridge the gap between hearing and deaf conversationalists) who have studied sign language and Deaf culture for years to obtain advanced fluency and certification are not considered “Deaf” unless they were born into the Deaf world. A history of oppression and ableism toward people who are deaf or hard of hearing has created a strong and empowered group resolute in their traditions. Deaf culture is rich with history, traditions, nuances, and a strong sense of pride.

In the United States and other places worldwide, Deaf people have their own language, schools, and customs. This is called Deaf culture. In the United States, deaf individuals often communicate using American Sign Language (ASL); ASL has no verbal component and is based entirely on five parameters: handshape, palm orientation, movement, location, and facial expression or non-manual signals (like raising or lowering the eyebrows).

As terms like “hearing loss” and “hearing impaired” and other phrases with a negative connotation suggest, deafness is treated in the medical community as a disability, something that needs to be “fixed.” Deaf people do not see deafness as a “loss” of hearing but as a “gain” of community and diversity. The term “Deaf Gain” is a popular phrase used by the Deaf community to express pride in their language, community, resilience, and cultural traditions.

Language Acquisition and Hearing Loss

More than 90% of deaf children are born to hearing parents, while 90% of children born to deaf parents can hear. This leaves many of these children to occupy a space between the “Deaf World” and the “Hearing World.” When a child is diagnosed as deaf, parents have difficult decisions to make. Should the child be enrolled in mainstream schools and taught to verbalize and read lips to better live in the hearing world? Or should the child be sent to a school for deaf children to learn ASL and have significant exposure to Deaf culture and the Deaf world? Do you think there might be differences in how parents approach these decisions depending on whether they are hearing or deaf?

Hearing children of Deaf parents will traditionally learn a signed visual language like American Sign Language as their primary or first language. Because there is no barrier for sighted individuals, anyone can learn to communicate using sign language (even those who are blind can learn to sign with some basic modification!). These hearing children will then learn their regional or national language as their second language to communicate outside of their home and immediate community. These children of Deaf adults, or CODAs, are integral and valued members of the Deaf community and actively participate in both the Deaf and hearing worlds.

Most hearing parents of deaf children will struggle with deciding how best to raise a deaf child in a hearing world. The discrimination against the Deaf child will often start immediately following the mandatory hearing tests performed on newborns, with the phrase “I’m so sorry, your child is deaf.” This instantly tells parents that their child’s deafness is negative and should be mourned. Parents will learn about cochlear implants, and their child’s deafness will be approached as a disability, without cultural context or outreach opportunities to connect them with the Deaf community. Despite extensive research, many doctors and audiologists are unaware of, or biased against, the effectiveness of American Sign Language and other signed communication systems. Most medical programs teach their students about “fixes” for deafness, treating deafness as a disability rather than treating Deaf people as a cultural group. They discuss implants but do not teach medical students about sign language or Deaf culture.

Because of this, 70% of hearing parents of deaf children will never formally learn a signed language like American Sign Language to communicate with their children, even if that child has learned to sign through an outreach program. Instead, many will learn a few basic signs and “home signs” (made-up signs that have no meaning outside of the family unit or immediate community), which allow them to communicate basic ideas and concepts. Most hearing parents will either elect not to develop an advanced communication system with their deaf child or will elect for their child to learn to communicate in the “hearing world.” Many parents have elected to have their children learn an oral (spoken) method of communication like lipreading. Alternatively, they may elect for their children to receive a cochlear implant. This advanced technology allows sound information to be relayed to the brain by directly stimulating the auditory nerve, bypassing the source of the hearing difficulties discussed above. While many deaf children have found success in oral methods, research has shown they are most effective for children who have already orally acquired the foundations of their regional language before becoming deaf or, in the case of implants, for children who complete the required surgery before their fifth birthday.

Watch this video about language acquisition, deprivation, and ableism:

How are negative stereotypes about groups started? Why are they so prevalent? What groups are subject to negative stereotypes? Which groups are not subject to negative stereotypes?

Cochlear Implants

The cochlear implant is a topic of moral and cultural debate within the Deaf community. Some believe the child is too young to understand their Deafness or to consent to the surgery (or to consent to “become hearing”). Others feel it will rob the child of the deaf experience and alter a fundamental part of their identity. Still others believe that an implant is a form of Deaf erasure and ableism that further oppresses those who choose to embrace their Deafness by reinforcing the idea that it is a disability to be “fixed.” The counter-argument comes from those who feel that a cochlear implant will benefit the child and allow them to interact with their hearing family and hearing community with fewer outside accommodations. They believe the child will succeed more in the hearing world than in the Deaf world. While there are genuine concerns on both sides, the ableist oppression of the Deaf plays a large part in the discussion. This is essential to recognize and is discussed in the sections on ableism and Deaf resilience.

Language acquisition is critical to any child’s mental and physical well-being. Language allows a person to develop metacognition (an awareness of thoughts and thought processes); it helps to develop and regulate executive functions like memory and behavior; and it helps an individual to develop a sense of others’ thoughts and emotions, which leads to strong relationships and empathy. The foundational tools of language acquisition develop before the age of five. Studies have shown success in introducing both hearing and deaf children to signed language. Early studies suggest both groups can benefit from signing, as it bypasses a baby’s inability to vocalize sounds accurately and instead allows them to communicate their thoughts earlier, leading to more advanced vocabulary and language skills before school begins.

If signed language is the most accessible option for communication between deaf and hearing individuals, why don’t more people sign? Why would parents choose the hearing world over the deaf world? Why are Deaf parents more likely to encourage dual citizenship in the deaf and hearing world? Is deafness a disability? Are all differently-abled people disabled? What makes someone disabled? Is disability black and white?

Watch this video where Deaf actress Marlee Matlin (Children of a Lesser God, CODA) discusses the cultural controversy over cochlear implants in the Deaf community.

Now, watch this video where several deaf individuals discuss their own experiences with deafness and their cochlear implants.

Ableism

Ableism is the belief that a disability (temporary or permanent) makes a person inferior or “less than” others, based on that person’s perceived abilities. It is a form of oppression that many disabled or differently-abled individuals have faced and continue to face. Since ancient times, the deaf have been viewed as disabled, lesser, and incapable of reason, and few were empowered to lead traditional lives. “Deaf and dumb” was historically regarded as an appropriate way of describing someone who does not hear; the first formal sign language was developed by Abbé Charles-Michel de l’Épée and published under the title “Instruction of deaf and dumb by means of methodical signs.” This discriminatory and derogatory phrase has since been recognized as ableist. However, it is still used in popular media, along with the phrase “Are you deaf?”, an exclamation of frustration directed at someone who is not comprehending an idea in verbal conversation.

Alexander Graham Bell, the inventor of the telephone, held many destructive and oppressive views about the deaf, including that signed language was inferior to spoken language. Despite having a wife who was deaf, he strongly lobbied for the banning of signed languages. Many sources suggest that he was in favor of eugenics (a method of genetic purification) by supporting the regulation of marriage and procreation for people who are deaf or hard of hearing.

Signed Language Ban

Despite the successful establishment of numerous schools for the Deaf internationally, in 1880, the Second International Congress on Education of the Deaf in Milan, Italy, passed a resolution banning all signed languages from Deaf schools internationally. Instead, a proprietary oral method of instruction would become the only acceptable teaching method. This led to the firing of many prominent deaf instructors, forced oral instruction for all students, and severe punishment of students who used signed language.

Signing now had to be done in secret, with older students risking severe (often physical) punishment to continue the traditions of sign language. The hands of deaf individuals were often tied in punishment to ensure they would not sign with one another. Despite the research of the time showing the low success rates of the oral method, it was the only official method by which the deaf would be taught to communicate. It is important to note that Gallaudet University, the first 4-year university for the Deaf, refused the ban and kept signed language as its primary teaching language. This decision and the “secret signing” of students globally are widely credited with the survival of signed languages like ASL.

It wasn’t until 1960 that William Stokoe’s research on American Sign Language was published and American Sign Language was officially recognized as a legitimate language, equivalent and equal to spoken language. However, it wasn’t until 2010 that the convening Congress issued a formal apology and recognized signed languages, 130 years after its original ruling.

Deaf Resilience

The Deaf are resilient; their history may have been plagued with ableism and oppression, but they are not defined by it. Every time you see the famous huddle during a football game, you can thank the deaf football players at Gallaudet, who needed a creative way to hide signs from opposing teams. Martha’s Vineyard was originally a thriving Deaf community: due to several families with hereditary deafness, the island became a hub of the Deaf community, and a unique derivative of sign language was used there.

Influential and amazing people throughout history who are deaf or hard of hearing:

Helen Keller (Deaf-blind advocate for literacy)

Juliette Gordon Low (founder of the Girl Scouts)

Heather Whitestone (Miss America)

Marlee Matlin (Advocate, first Deaf Oscar winner, Children of a Lesser God)

Sue Thomas (Undercover Specialist for the FBI)

Casar Jacobson (Actress, UN Champion, Scientist)

Dr. Candace McCullough (first practicing Deaf psychologist)

Troy Chapman (First Deaf black actor to win NYC Best actor, founding member “Dangerous Signs” ASL Poetry group)

Dr. Simon J. Carmel (famous magician)

Bob Hiltermann (founder of Deaf West Theatre)

James Lee Taylor III (rapper)

Stephanie Nogueras (Actress, Switched at Birth)

Nyle DiMarco (contestant on America’s Next Top Model and Dancing with the Stars)

Troy Kotsur (Arizona native, first Deaf male Oscar winner, CODA)

And many, many, many more!

What jobs do you think deaf people have? Are there any jobs that you feel deaf people should not have? Why? What accommodations could be made for these jobs?

Summary

Sound waves are funneled into the auditory canal and cause vibrations of the eardrum; these vibrations move the ossicles. As the ossicles move, the stapes presses against the oval window of the cochlea, which causes fluid inside the cochlea to move. As a result, hair cells embedded in the basilar membrane are stimulated and send neural impulses to the brain via the auditory nerve.

Pitch perception and sound localization are important aspects of hearing. Our ability to perceive pitch relies on both the firing rate of the hair cells in the basilar membrane as well as their location within the membrane. In terms of sound localization, both monaural and binaural cues are used to locate where sounds originate in our environment.

Individuals can be born deaf, or they can develop deafness as a result of age, genetic predisposition, and/or environmental causes. Clinically, deafness that results from a failure of the vibration of the eardrum or the resultant movement of the ossicles is called conductive hearing loss*. Deafness that involves a failure of the transmission of auditory nerve impulses to the brain is called sensorineural hearing loss*. Deaf people have a rich culture and history. Despite a history of oppression and ableism, the community is strong, with many viewpoints and diverse perspectives and a history of inspiring community members who have left a lasting impact in both the Deaf and hearing worlds. Hearing parents of deaf children face tough and often uninformed decisions in the current medical system, but all deaf and Deaf children can be empowered to lead lives as fulfilling as those of their hearing peers, whether they choose to use cochlear implants, an oral technique, or signed language. While hardly an exhaustive overview of the topic, this brief discussion of Deaf culture is a foundation for future exploration.

*Despite the negative stereotypes associated with the term, “hearing loss” is still widely used throughout the medical community and is included here to avoid confusion for students continuing in this field.

Review Questions

Critical Thinking Question

Given what you’ve read about sound localization, from an evolutionary perspective, how does sound localization facilitate survival?

Sound localization would have allowed early humans to locate prey and protect themselves from predators.

How can temporal and place theories both be used to explain our ability to perceive the pitch of sound waves with frequencies up to 4000 Hz?

Pitch of sounds below this threshold could be encoded by the combination of the place and firing rate of stimulated hair cells. So, in general, hair cells located near the tip of the basilar membrane would signal that we’re dealing with a lower-pitched sound. However, differences in firing rates of hair cells within this location could allow for fine discrimination between low-, medium-, and high-pitch sounds within the larger low-pitch context.

Personal Application Question

Find a Deaf content creator (or use one of the suggestions below) and watch some of their content. What are your thoughts? What makes the videos easy or difficult for you to follow? Don’t forget: if you like their content, give them a follow!

TikTok:

Phelan Conheady (@signinngwolf)

Kaitlyn Gagnon (@kaitcanthearyou)

Scarlet Watters (@scarlet_may.1)

YouTube:

Sign Duo

ASL Stew

Jessica Kellgren-Fozard

Seek The World

Rikki Poynter