What people need most when confronted with a claim that may not be 100% true are things they can do to get closer to the truth. They need something I have decided to call “moves.”

Moves accomplish intermediate goals in the fact-checking process. They are associated with specific tactics. Here are the four moves this guide will hinge on:

- Check for previous work: Look around to see if someone else has already fact-checked the claim or provided a synthesis of research.

- Go upstream to the source: Most web content is not original. Get to the original source of the claim to understand the trustworthiness of the information.

- Read laterally: Once you get to the source of a claim, read what other people say about the source (publication, author, etc.). The truth is in the network.

- Circle back: If you get lost, hit dead ends, or find yourself going down an increasingly confusing rabbit hole, back up and start over knowing what you know now. You’re likely to take a more informed path with different search terms and better decisions.

In general, you can try these moves in sequence. If you find success at any stage, your work might be done.

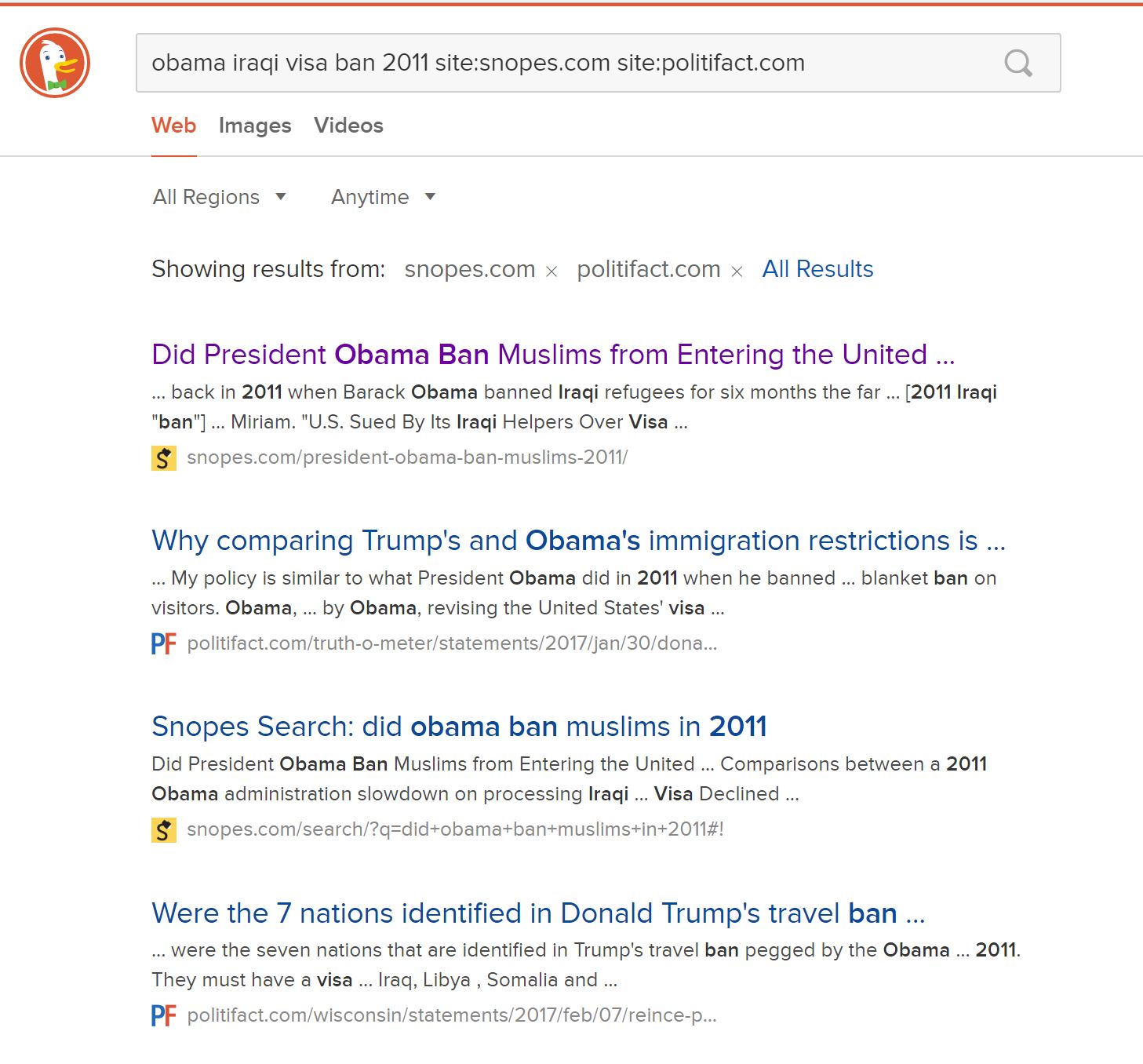

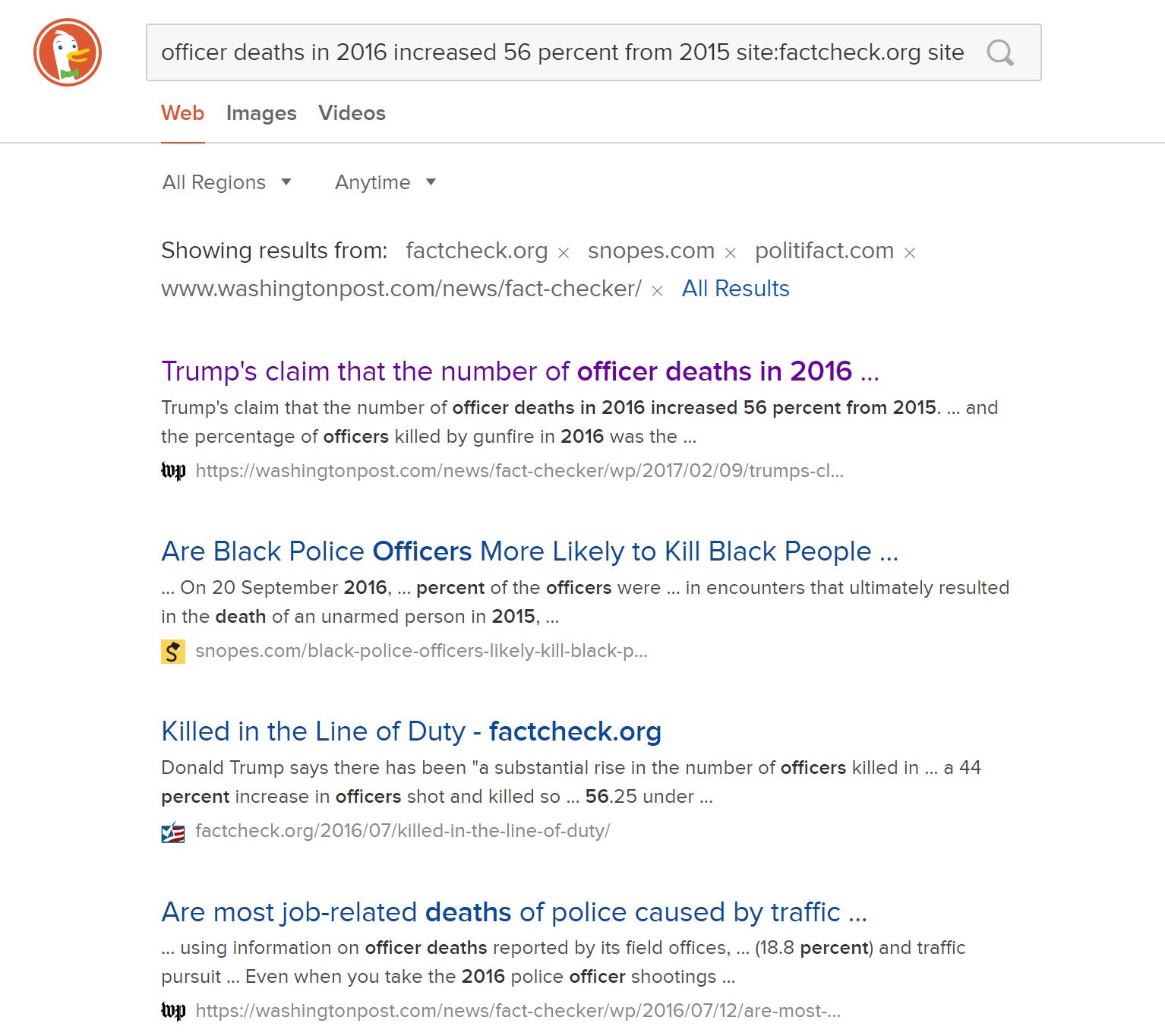

When you encounter a claim you want to check, your first move might be to see if sites like PolitiFact, Snopes, or even Wikipedia have researched the claim (Check for previous work).

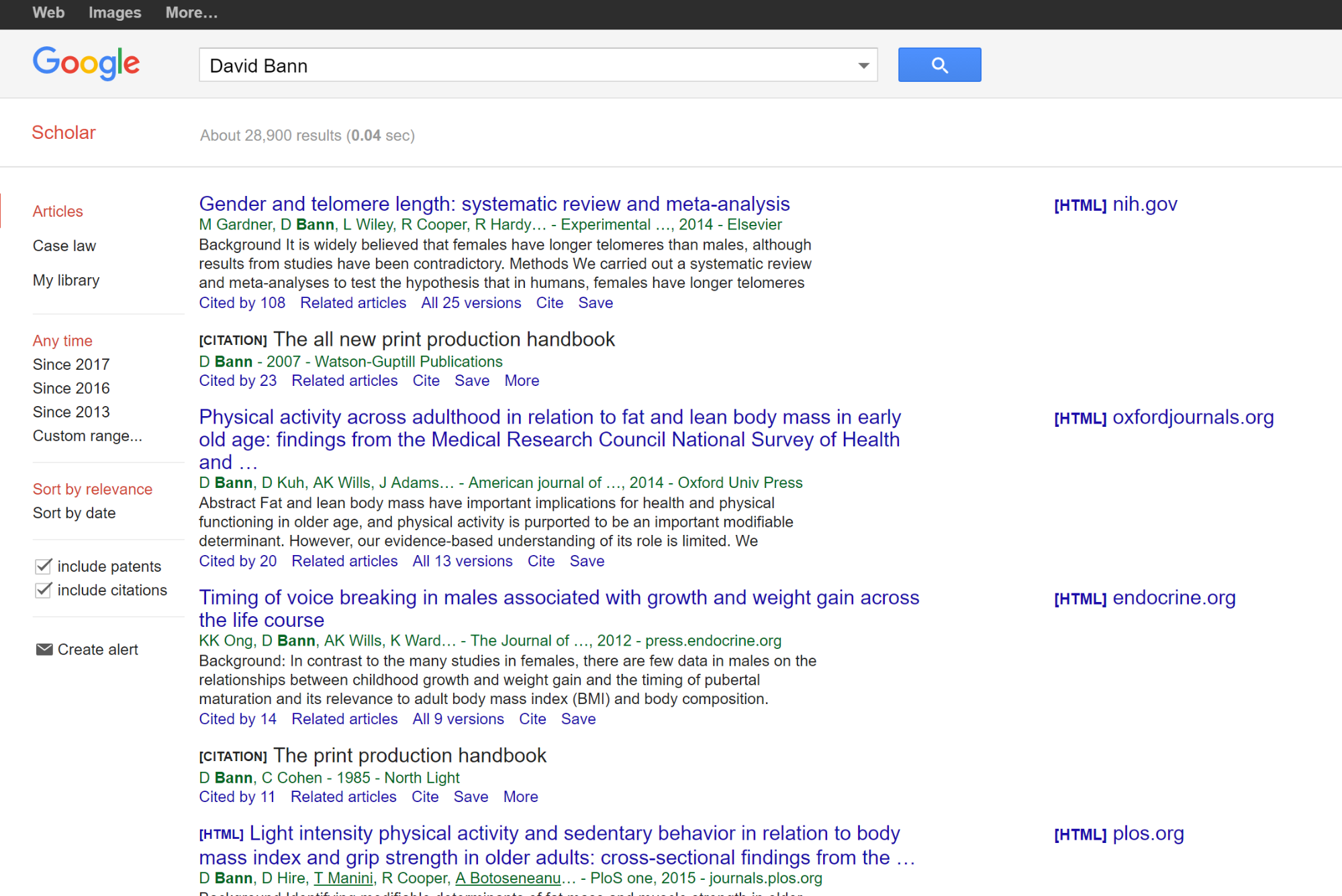

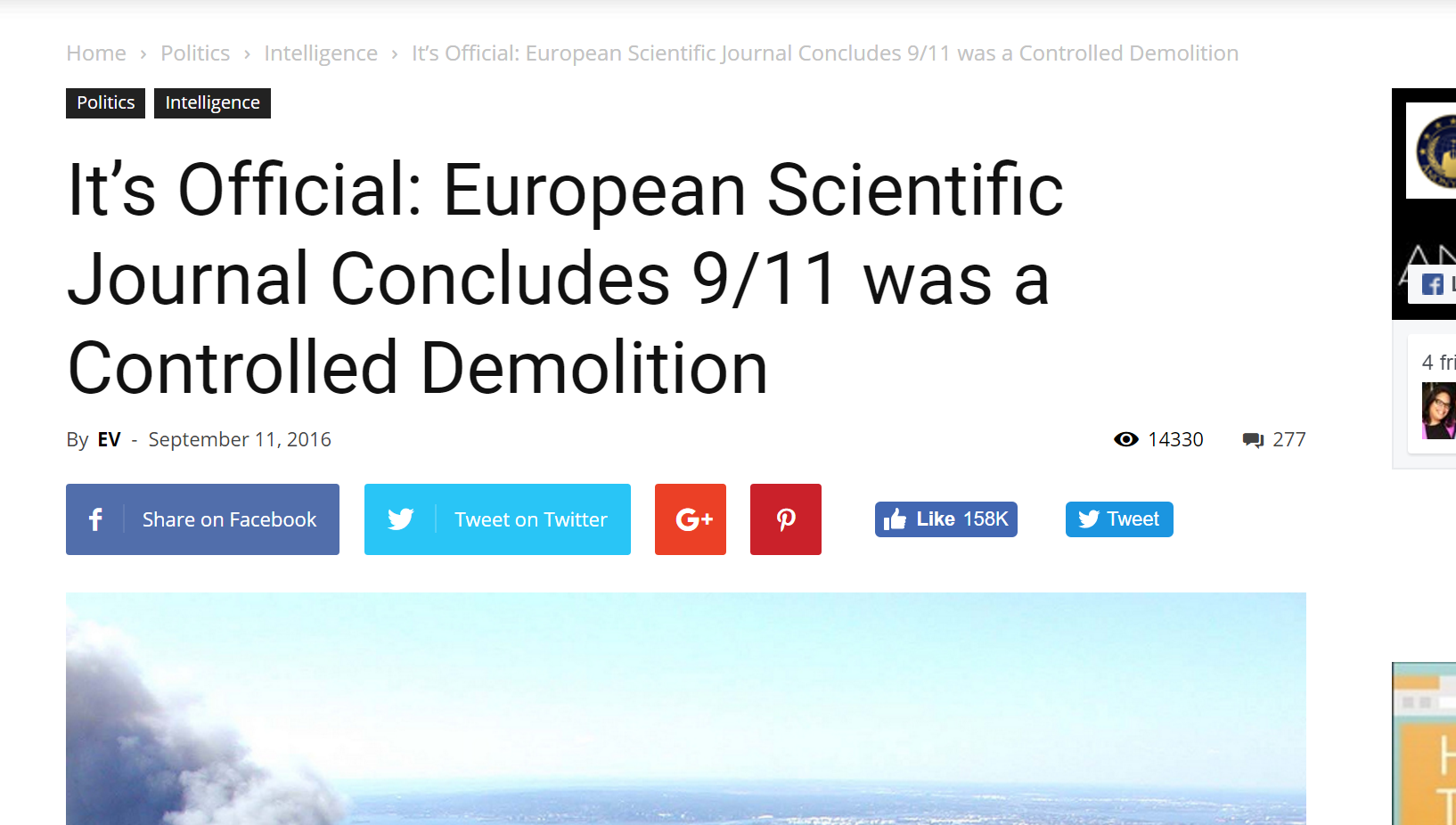

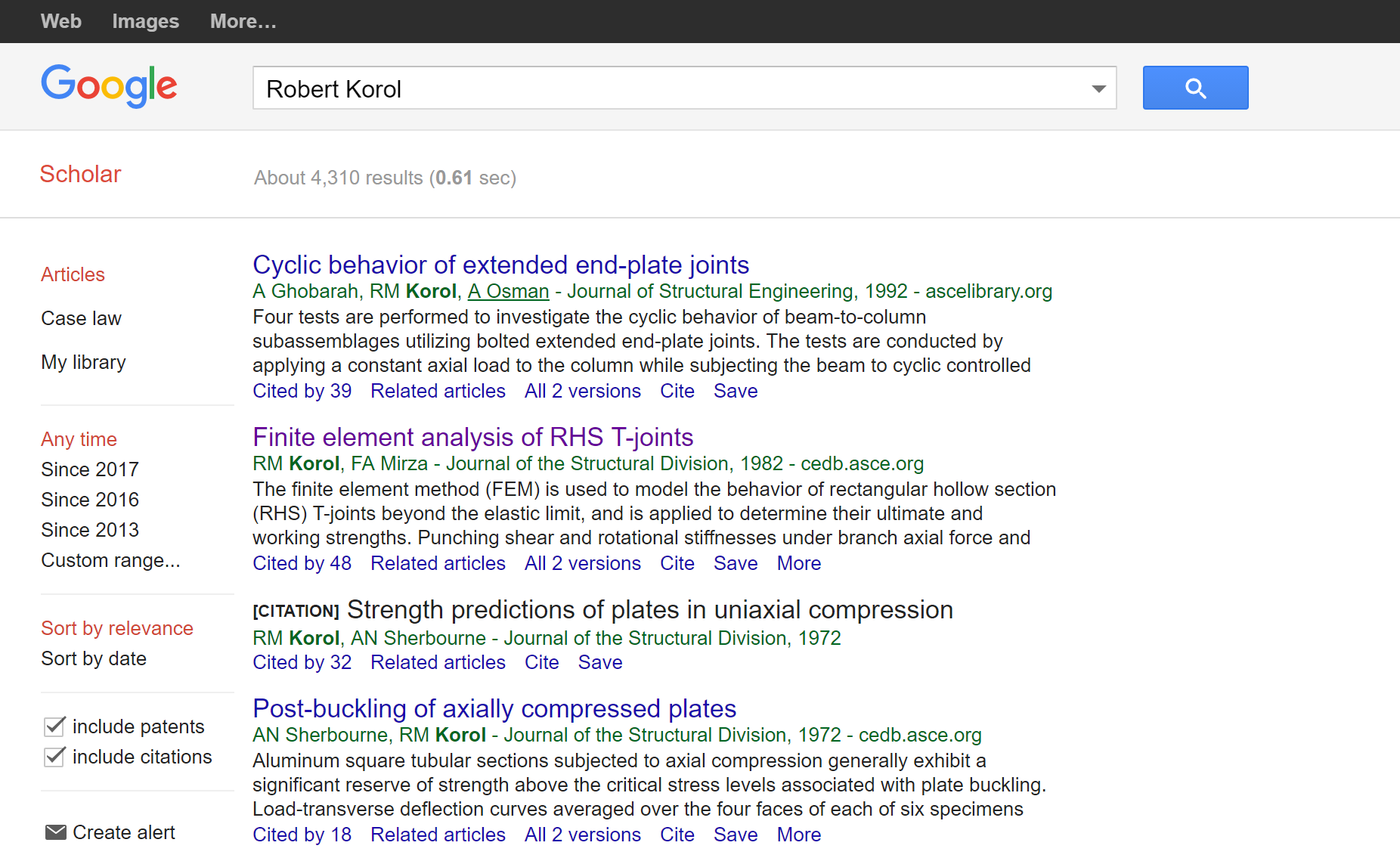

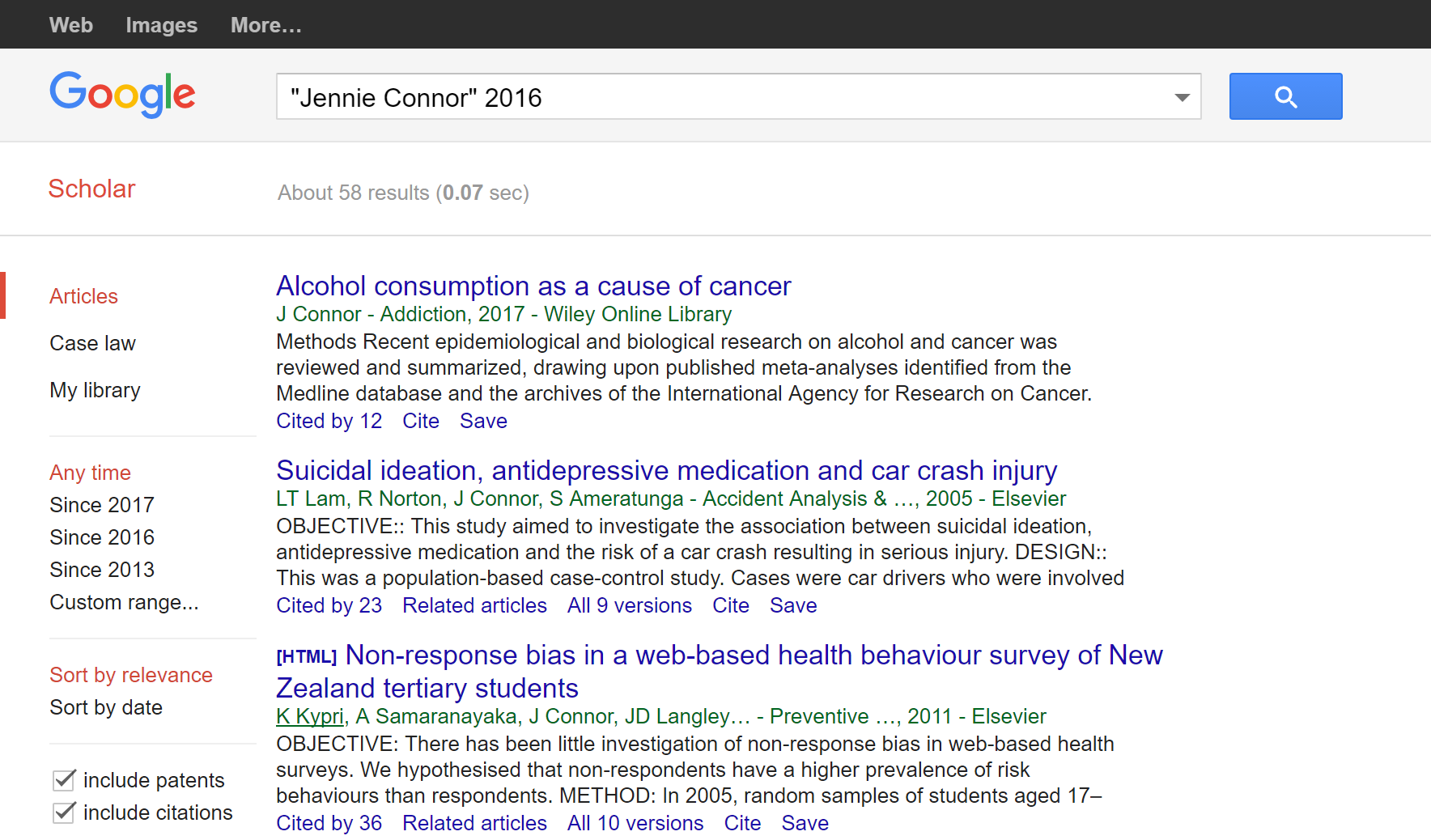

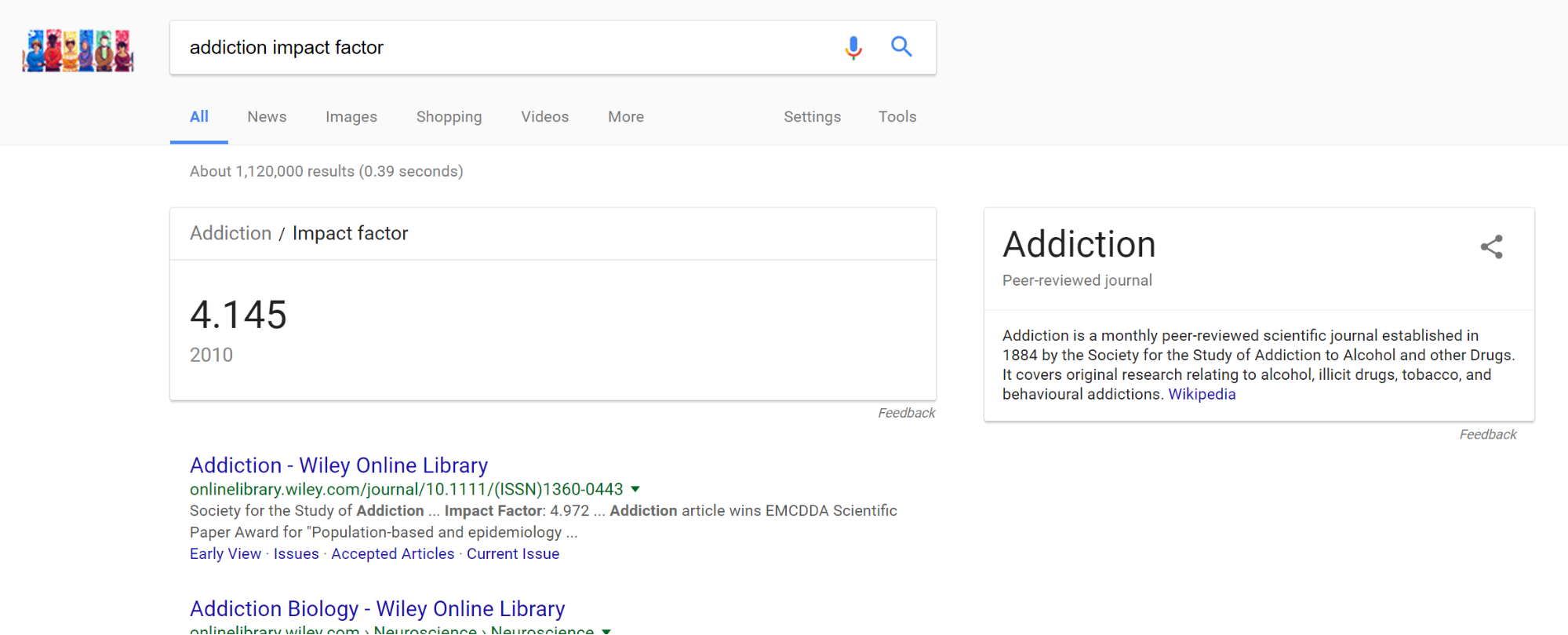

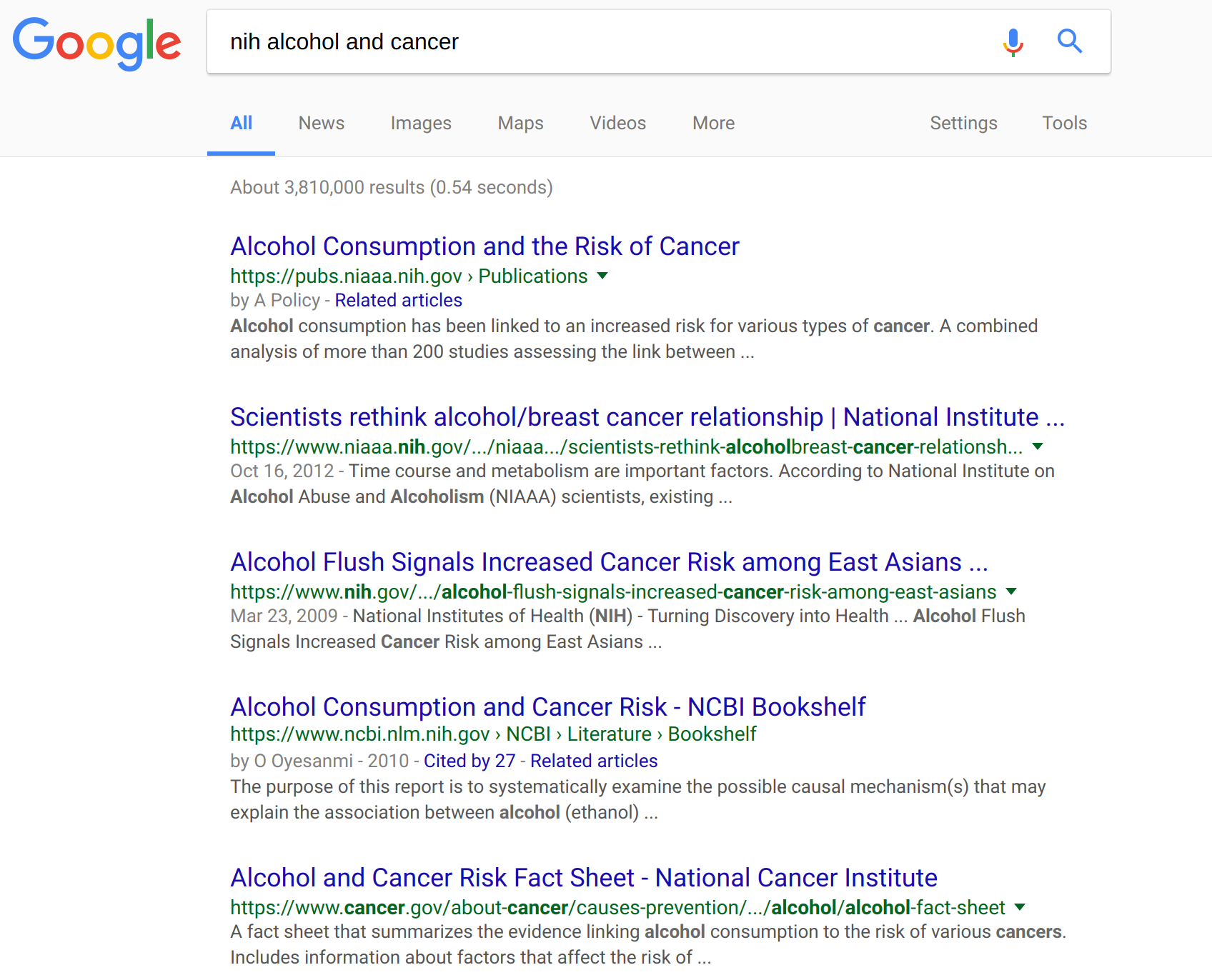

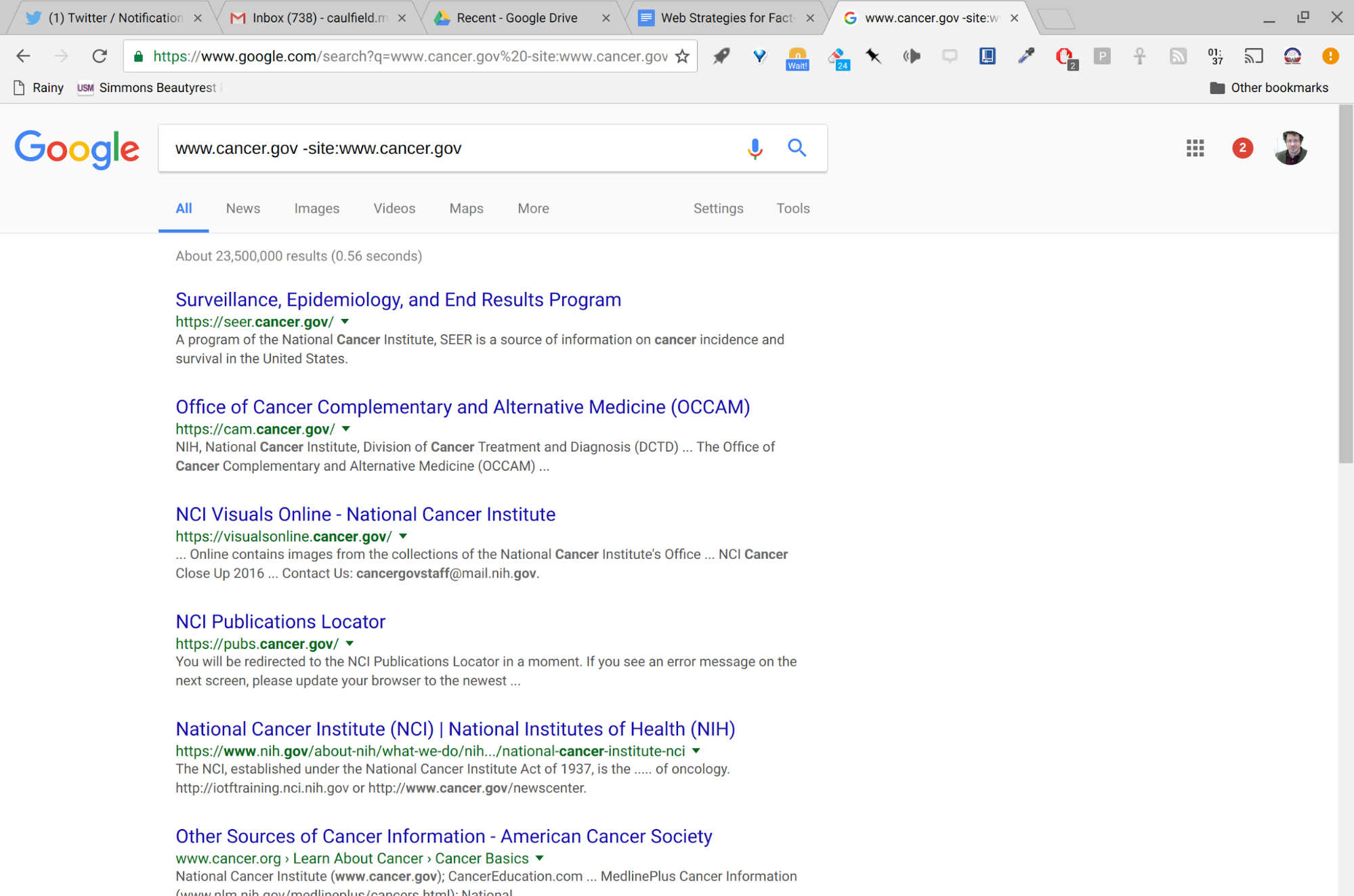

If you can’t find previous work on the claim, start by trying to trace the claim to the source. If the claim is about research, try to find the journal it appeared in. If the claim is about an event, try to find the news publication in which it was originally reported (Go upstream).

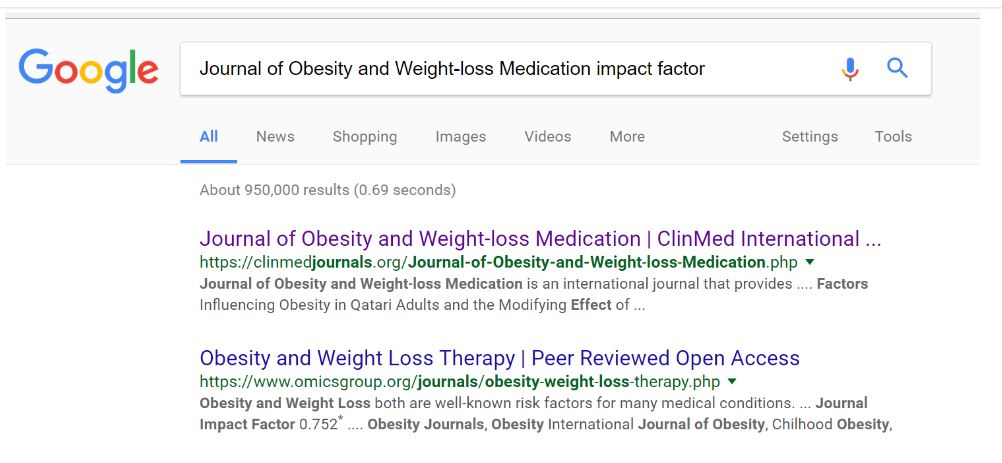

Maybe you get lucky and the source is something known to be reputable, such as the journal Science or the newspaper the New York Times. Again, if so, you can stop there. If not, you’re going to need to read laterally, finding out more about this source you’ve ended up at and asking whether it is trustworthy (Read laterally).

And if at any point you fail, whether because the source you find is not trustworthy, complex questions emerge, or the claim turns out to have multiple sub-claims, then you circle back and start a new process. Rewrite the claim. Try a new search of fact-checking sites, or find an alternate source (Circle back).
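For readers who find it easier to see a process laid out as code, the sequence of moves described above can be sketched roughly as follows. This is only an illustration under stated assumptions: every function passed in (`find_previous_work`, `find_source`, `source_is_reputable`, `read_laterally`, `rewrite_claim`) is a hypothetical stand-in for research you do by hand, and the `max_rounds` cap is an arbitrary choice, not part of the method.

```python
def check_claim(claim, find_previous_work, find_source,
                source_is_reputable, read_laterally, rewrite_claim,
                max_rounds=3):
    """Try the four moves in sequence, stopping at the first success.

    All callables are hypothetical placeholders for human judgment.
    """
    for _ in range(max_rounds):
        # Move 1: check for previous work (fact-checking sites, syntheses).
        verdict = find_previous_work(claim)
        if verdict is not None:
            return f"settled by previous work: {verdict}"

        # Move 2: go upstream to the original source of the claim.
        source = find_source(claim)
        if source is not None:
            if source_is_reputable(source):
                return f"traced to a reputable source: {source}"
            # Move 3: read laterally, i.e. see what others say about the source.
            if read_laterally(source):
                return f"source vouched for by others: {source}"

        # Move 4: circle back with a rewritten claim or new search terms.
        claim = rewrite_claim(claim)

    return "unresolved"


# Example with made-up stubs: no prior fact-check exists,
# but the claim traces back to a well-known journal.
result = check_claim(
    "a claim about new research",
    find_previous_work=lambda c: None,
    find_source=lambda c: "Science",
    source_is_reputable=lambda s: s in {"Science", "New York Times"},
    read_laterally=lambda s: False,
    rewrite_claim=lambda c: c,
)
print(result)
```

The early returns mirror the advice that success at any stage may mean your work is done, while the loop reflects circling back with what you have learned.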

BUILDING A FACT-CHECKING HABIT BY CHECKING YOUR EMOTIONS

In addition to the moves, I’ll introduce one more word of advice: Check your emotions.

This isn’t quite a strategy (like “go upstream”) or a tactic (like using date filters to find the origin of a fact). For lack of a better word, I am calling this advice a habit.

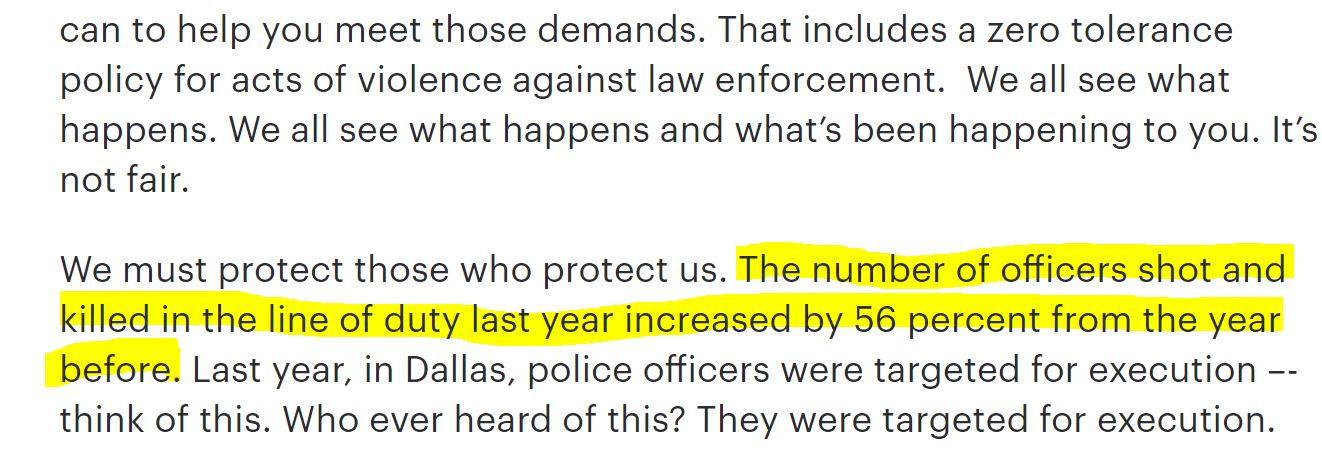

The habit is simple. When you feel strong emotion–happiness, anger, pride, vindication–and that emotion pushes you to share a “fact” with others, STOP. Above all, these are the claims that you must fact-check.

Why? Because you’re already likely to check things you know are important to get right, and you’re predisposed to analyze things that put you in an intellectual frame of mind. But things that make you angry or overjoyed, well… our record as humans is not good with these things.

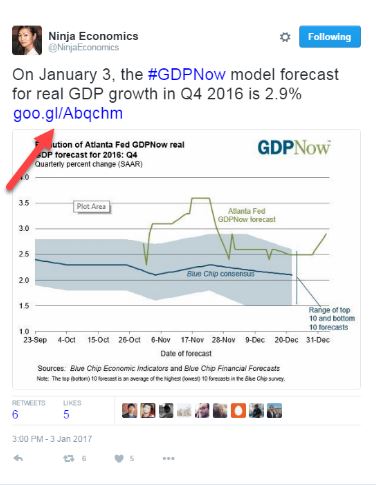

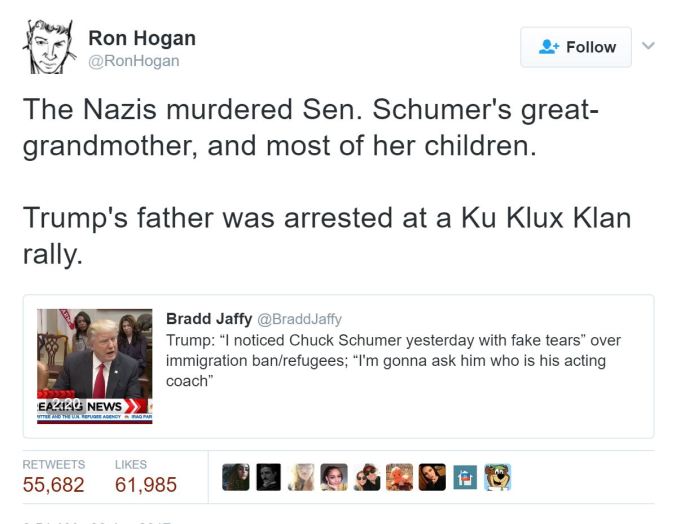

As an example, I’ll cite this tweet that crossed my Twitter feed:

You don’t need to know much of the background of this tweet to see its emotionally charged nature. President Trump had insulted Chuck Schumer, a Democratic Senator from New York, and characterized the tears that Schumer shed during a statement about refugees as “fake tears.” This tweet reminds us that Senator Schumer’s great-grandmother died at the hands of the Nazis, which could explain Schumer’s emotional connection to the issue of refugees.

Or does it? Do we actually know that Schumer’s great-grandmother died at the hands of the Nazis? And if we are not sure this is true, should we really be retweeting it?

Our normal inclination is to ignore verification needs when we react strongly to content, and researchers have found that content that causes strong emotions (both positive and negative) spreads the fastest through our social networks. Savvy activists and advocates take advantage of this flaw of ours, getting past our filters by posting material that goes straight to our hearts.

Use your emotions as a reminder. Strong emotions should become a trigger for your new fact-checking habit. Every time content you want to share makes you feel rage, laughter, ridicule, or even a heartwarming buzz, spend 30 seconds fact-checking. It will serve you well.

- See “What Emotion Goes Viral the Fastest?” by Matthew Shaer.