Chapter 6: z-scores and the Standard Normal Distribution

Review

We now understand how to describe and present our data visually and numerically. These simple tools, and the principles behind them, will help you interpret information presented to you and understand the basics of a variable. Moving forward, we now turn our attention to how scores within a distribution are related to one another, how to precisely describe a score’s location within the distribution, and how to compare scores from different distributions.

Revisiting Percentiles

In many situations, it is useful to have a way to describe the location of an individual score within its distribution. One approach is the percentile rank. The percentile rank of a score is the percentage of scores in the distribution that are lower than that score. Percentiles are useful for comparing values.

Consider, for example, the distribution of Rosenberg Self-esteem scores we used in chapter 2. For any score in the distribution, we can find its percentile rank by counting the number of scores in the distribution that are lower than that score and converting that number to a percentage of the total number of scores.

| Self-Esteem Scores | Frequency | Cumulative Frequency | Cumulative Percentage |
|---|---|---|---|
| 24 | 3 | 40 | 100 |
| 23 | 5 | 37 | 92.5 |
| 22 | 10 | 32 | 80 |
| 21 | 8 | 22 | 55 |
| 20 | 5 | 14 | 35 |
| 19 | 3 | 9 | 22.5 |
| 18 | 3 | 6 | 15 |
| 17 | 0 | 3 | 7.5 |
| 16 | 2 | 3 | 7.5 |
| 15 | 1 | 1 | 2.5 |

Table 1. Frequency table for Rosenberg self-esteem scores

Notice, for example, that five of the students represented by the data in the table had self-esteem scores of 23. In this distribution, 32 of the 40 scores (80%) are lower than 23 (the cumulative frequency for a score of 22 is 32). Thus, students with a score of 23 have a percentile rank of 80. It can also be said that they scored at the 80th percentile. Remember that we find a percentile rank by counting the number of scores in the distribution that are lower than that score and converting that number to a percentage of the total number of scores (32/40 = 80%). Percentile ranks are often used to report the results of standardized tests of ability or achievement. If your percentile rank on a test of verbal ability were 40, for example, this would mean that you scored higher than 40% of the people who took the test.
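The counting procedure can be sketched in a few lines of Python. This is only an illustration: the dictionary `frequencies` is transcribed from Table 1, and the function name `percentile_rank` is our own choice, not a standard library function.

```python
# Frequencies transcribed from Table 1 (score -> number of students).
frequencies = {24: 3, 23: 5, 22: 10, 21: 8, 20: 5,
               19: 3, 18: 3, 17: 0, 16: 2, 15: 1}

def percentile_rank(score, freq):
    """Percentage of scores in the distribution that are lower than `score`."""
    total = sum(freq.values())
    lower = sum(count for value, count in freq.items() if value < score)
    return 100 * lower / total

print(percentile_rank(23, frequencies))  # 80.0
```

Because the definition used in this chapter counts only scores strictly lower than the one of interest, the lowest score in the table (15) has a percentile rank of 0.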

Normal Distributions

The normal distribution is the most important and most widely used distribution in statistics. It is sometimes called the “bell curve,” although the tonal qualities of such a bell would be less than pleasing. It is also called the “Gaussian curve” or Gaussian distribution, after the mathematician Carl Friedrich Gauss. Let’s review a little bit about the normal distribution. The normal distribution is described in terms of two parameters: the mean (which you can think of as the location of the peak) and the standard deviation (which specifies the width of the distribution). The bell-like shape of the distribution never changes, only its location and width. The normal distribution is commonly observed in data collected in the real world, as we have already seen in Chapter 3, and in Chapter 7 we will learn more about why this occurs.

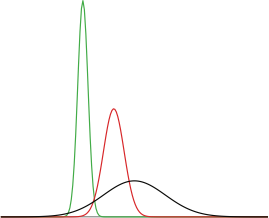

Strictly speaking, it is not correct to talk about “the normal distribution” since there are many normal distributions. Normal distributions can differ in their means and in their standard deviations. Figure 1 shows three normal distributions. The green (left-most) distribution has a mean of -3 and a standard deviation of 0.5, the distribution in red (the middle distribution) has a mean of 0 and a standard deviation of 1, and the distribution in black (right-most) has a mean of 2 and a standard deviation of 3. These, as well as all other normal distributions, are symmetric, with relatively more values at the center of the distribution and relatively few in the tails. What is consistent across all normal distributions is the shape and the proportion of scores within a given distance of the mean, measured in standard deviations along the x-axis. We will focus on the Standard Normal Distribution (also known as the Unit Normal Distribution), which has a mean of 0 and a standard deviation of 1 (i.e., the red distribution in Figure 1).

Figure 1. Normal distributions differ in mean and standard deviation.

Features of the normal distribution

- Normal distributions are symmetric around their mean.

- The mean, median, and mode of a normal distribution are equal.

- The area under the normal curve is equal to 1.0 (100%).

- Normal distributions are denser in the center and less dense in the tails.

- Normal distributions are defined by two parameters, the mean (μ) and the standard deviation (σ).

- Approximately 68% of the area of a normal distribution is within one standard deviation of the mean.

- Approximately 95% of the area of a normal distribution is within two standard deviations of the mean.

These properties enable us to use the normal distribution to understand how scores relate to one another within and across a distribution. But first, we need to learn how to calculate the standardized scores that make up a standard normal distribution.

z-scores

As we learned in earlier lessons, the population mean (µ) and population standard deviation (σ) describe an entire distribution of scores, and both are computed from the individual scores in it. If two data sets have different means and standard deviations, then comparing their data values directly can be misleading. A z-score is a standardized version of a raw score (x) that identifies the exact location of that score within its distribution. By transforming our raw scores into z-scores, we can make meaningful comparisons across different samples or groups. Every value in a distribution has a z-score that can be calculated to standardize it for comparison.

Let’s say that you received a score of 76 on your Chemistry exam and your friend received a score of 76 on her Physics exam. Who is doing better in class? It is hard to say because we do not have enough information. This is an example of how z-scores can facilitate meaningful comparisons. The z-score for a particular individual is the difference between that individual’s score and the mean of the distribution, divided by the standard deviation of the distribution.

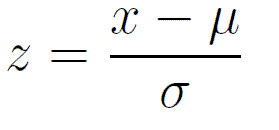

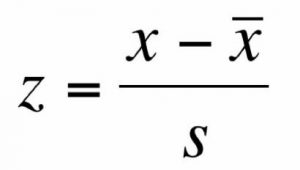

Formulas to calculate Z scores

| Population | Sample |
|---|---|
| $z = \dfrac{x - \mu}{\sigma}$ | $z = \dfrac{x - M}{s}$ |

Note that it is essentially the same formula where the appropriate symbols for mean and standard deviation have been used depending on if working with population or sample data.

As you can see, z-scores combine information about where the distribution is located (the mean/center) with how wide the distribution is (the standard deviation/spread) to interpret a raw score (x). Specifically, z-scores tell us how far a score is from the mean, in units of standard deviations, and in what direction. This transformation provides a reference using the standard normal distribution. If we are given a z-score, we know where, relative to the mean, the z-score and its raw score lie.

Z-scores & the standard normal distribution

Z-score distribution

- If the Z score is negative, then the score falls below the mean

- If the Z score is 0, then the score falls at the mean

- If the Z score is positive, then the score falls above the mean

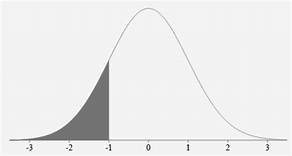

Let’s look at some examples. A z-score value of -1.0 tells us that the score is 1 standard deviation (because of the magnitude 1.0) below (because of the negative sign) the mean.

Figure 2. z-score of -1

Similarly, a z-score value of 1.0 tells us that the score is 1 standard deviation above the mean. Thus, these two scores are the same distance away from the mean but in opposite directions. A z-score of -2.5 is two-and-a-half standard deviations below the mean and is therefore farther from the center than both of the previous scores, and a z-score of 0.25 is closer to the center than all of the ones before.

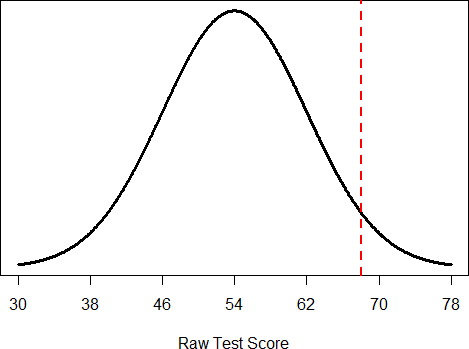

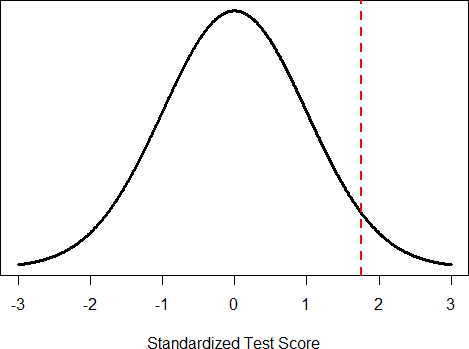

We can convert raw scores into z-scores to get a better idea of where in the distribution those scores fall. Let’s say we get a score of 68 on an exam (X=68). We may be disappointed to have scored so low, but perhaps it was just a very hard exam. Having information about the distribution of all scores in the class would be helpful to put some perspective on ours. We find out that the class got an average score (M) of 54 with a standard deviation (s) of 8. To find out our relative location within this distribution, we simply convert our test score into a z-score.
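Using the sample formula with the values given above (X = 68, M = 54, s = 8), the conversion can be worked out as follows:

```latex
z = \frac{X - M}{s} = \frac{68 - 54}{8} = \frac{14}{8} = 1.75
```

Under these numbers, a score of 68 sits 1.75 standard deviations above the class average, which is a much stronger result than the raw score alone suggested.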

Figure 3. Raw and standardized versions of a single score

Figure 3 shows both the raw score and the z-score on their respective distributions. Notice that the red line indicating where each score lies is in the same relative spot for both. This is because transforming a raw score into a z-score does not change its relative location; it only makes it easier to know precisely where it is.

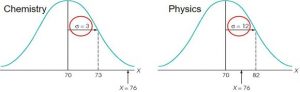

Example comparing raw scores: Comparing exam scores

Let’s go back to our Chemistry and Physics exam score comparisons. Each student received a score of x = 76 on the exam. Assume that:

- The average score for both exams is M = 70.

- The standard deviation for Chemistry is s = 3.

- The standard deviation for Physics is s = 12.

A z-score indicates how far above or below the mean a raw score is, but it expresses this in terms of the standard deviation. Both z-scores in our example are above the mean.

- Chemistry z-score is z = (76 − 70)/3 = +2.00

- Physics z-score is z = (76 − 70)/12 = +0.50

When we compare the position of the score x = 76 in the two distributions, it is clear that the Chemistry score holds the higher relative position: it is 2 standard deviations above the mean, while the Physics score is only half a standard deviation above the mean.
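The same comparison can be written as a short Python sketch. The helper name `z_score` is our own; the means and standard deviations are the assumed values from the example.

```python
def z_score(x, mean, sd):
    """Distance of x from the mean, in standard deviation units."""
    return (x - mean) / sd

# Both students scored 76, and both exams had a mean of 70.
chemistry_z = z_score(76, mean=70, sd=3)
physics_z = z_score(76, mean=70, sd=12)

print(f"Chemistry: z = {chemistry_z:+.2f}")  # Chemistry: z = +2.00
print(f"Physics:   z = {physics_z:+.2f}")    # Physics:   z = +0.50
```

The raw scores are identical, so the whole difference between the two z-scores comes from the spread of each distribution.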

Finally, z-scores are incredibly useful if we need to combine information from different measures that are on different scales. Let’s say we give a set of employees a series of tests on things like job knowledge, personality, and leadership. We may want to combine these into a single score we can use to rate employees for development or promotion, but look what happens when we take the average of raw scores from different scales, as shown in Table 2:

| Raw Scores | Job Knowledge (0 – 100) | Personality (1 – 5) | Leadership (1 – 5) | Average |
|---|---|---|---|---|
| Employee 1 | 98 | 4.2 | 1.1 | 34.43 |
| Employee 2 | 96 | 3.1 | 4.5 | 34.53 |
| Employee 3 | 97 | 2.9 | 3.6 | 34.50 |

Table 2. Raw test scores on different scales (ranges in parentheses).

Because the job knowledge scores were so big and the scores were so similar, they overpowered the other scores and removed almost all variability in the average. However, if we standardize these scores into z-scores, our averages retain more variability and it is easier to assess differences between employees, as shown in Table 3.

| z-scores | Job Knowledge (0 – 100) | Personality (1 – 5) | Leadership (1 – 5) | Average |
|---|---|---|---|---|
| Employee 1 | 1.00 | 1.14 | -1.12 | 0.34 |
| Employee 2 | -1.00 | -0.43 | 0.81 | -0.20 |
| Employee 3 | 0.00 | -0.71 | 0.30 | -0.14 |

Table 3. Standardized scores using data from table 2.
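As a rough check on Table 3, the standardization can be sketched in Python using only the standard library. We assume here that each test is standardized with the mean and sample standard deviation (n − 1 in the denominator) of the three employees; that assumption is ours, though it does reproduce the tabled values. The names `raw`, `standardize`, and `averages` are illustrative.

```python
from statistics import mean, stdev

# Raw scores from Table 2, one list per test (Employee 1, 2, 3).
raw = {
    "Job Knowledge": [98, 96, 97],
    "Personality": [4.2, 3.1, 2.9],
    "Leadership": [1.1, 4.5, 3.6],
}

def standardize(scores):
    """Convert raw scores to z-scores using the sample SD (n - 1)."""
    m, s = mean(scores), stdev(scores)
    return [(x - m) / s for x in scores]

z = {test: standardize(scores) for test, scores in raw.items()}

# Average each employee's z-scores across the three tests.
averages = [mean(z[test][i] for test in z) for i in range(3)]

for i, avg in enumerate(averages, start=1):
    print(f"Employee {i}: average z = {avg:.2f}")
```

Once every test is on the same scale, no single test dominates the average, which is why the employees are easier to tell apart than they were with raw averages.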

Setting the scale of a distribution

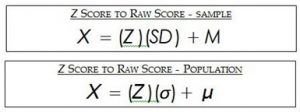

Another convenient characteristic of z-scores is that they can be converted into any “scale” that we would like. Here, the term scale means how far apart the scores are (their spread) and where they are located (their central tendency). In other words, we can convert that value into its original raw score (X) if the mean and standard deviation are known. We can still do this using the Z score formula we have been using so far this lesson. We just need to rearrange the variables so we are solving for X instead of Z.

The formulas for transforming z to x are:

| Population | Sample |
|---|---|
| $x = z\sigma + \mu$ | $x = zs + M$ |

Note: these are just simple rearrangements of the original formulas for calculating z from raw scores.

One problem is that z-scores aren’t exactly intuitive for many people. We can give people information about their relative location in the distribution (for instance, the first person scored well above average). Another option is to take the z-scores and transform them to a more familiar scale, like the traditional IQ distribution.

Let’s say we have z-scores of 1.71, 0.43, and −0.80 after converting three people’s raw intelligence test scores to z-scores. We can translate these z-scores into the more familiar metric of IQ scores, which have a mean of 100 and standard deviation of 16. We can use the transforming formula from above: X = z * SD + M

X = 1.71 ∗ 16 + 100 = 127.36, so IQ score of 127

X = 0.43 ∗ 16 + 100 = 106.88, so IQ score of 107

X = −0.80 ∗ 16 + 100 = 87.20, so IQ score of 87

We rounded the values to 127, 107, and 87, respectively, for convenience.
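The rescaling step can be sketched in Python as follows. The function name `z_to_scale` is our own, and the IQ mean of 100 and standard deviation of 16 are the values assumed in the example.

```python
def z_to_scale(z, mean, sd):
    """Place a z-score on a scale with the given mean and standard deviation."""
    return z * sd + mean

z_scores = [1.71, 0.43, -0.80]

# Convert each z-score to the IQ scale, then round to a whole number.
iq_scores = [round(z_to_scale(z, mean=100, sd=16)) for z in z_scores]

print(iq_scores)  # [127, 107, 87]
```

The same function works for any target scale. Only the `mean` and `sd` arguments change.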

Z-scores and the Area under the Curve

Even though we can use a z-score as a measure of relative standing for any shape of frequency distribution, we commonly use z-scores in this class when discussing normal distributions. They provide a way of describing where an individual’s score is located within a distribution and are sometimes used to report the results of standardized tests.

Z-scores and the standard normal distribution go hand-in-hand. A z-score will tell you exactly where in the standard normal distribution a value is located, and any normal distribution can be converted into a standard normal distribution by converting all of the scores in the distribution into z-scores, a process known as standardization. We will also see that one can identify the percentile for each z-score in a normal distribution.

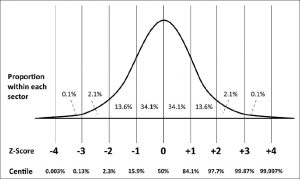

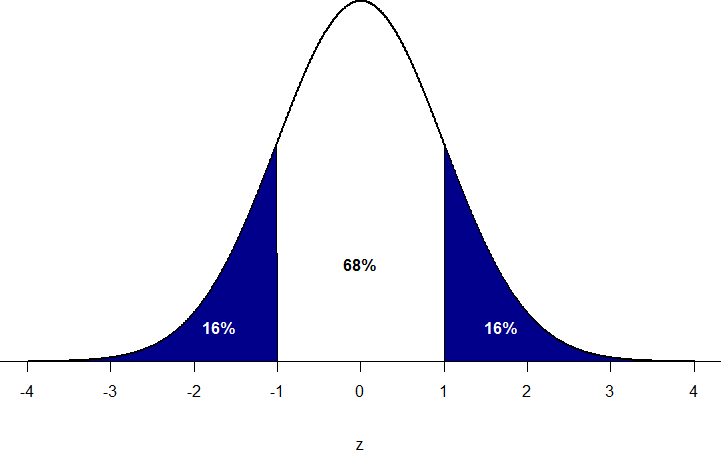

Since a z-score tells us how far above or below the mean a particular raw score lies (in standard deviation units), we can use z-scores in conjunction with the empirical rule. We can use z-scores to simplify the earlier statements we made regarding the Empirical Rule (68-95-99.7 rule):

- About 68% of all scores will fall between a z-score of -1.00 and +1.00

- About 95% of all scores will fall between a z-score of -2.00 and +2.00

- About 99.7% of all scores will fall between a z-score of -3.00 and +3.00

- 50% of all scores lie above a z-score of 0.00, and 50% lie below it

Take a minute to examine Figure 4 to identify these areas. For example, you can see that adding up the two areas between z = -1 and z = 1 gives about 68.2%. Because z-scores are in units of standard deviations, this means that about 68% of scores fall between z = -1.0 and z = 1.0, and so on. We call this 68% (or any percentage we have based on our z-scores) the proportion of the area under the curve. Remember, these percentages remain true only if our sample or population is normally distributed!
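These areas can be checked numerically. The sketch below uses `NormalDist` from Python's standard `statistics` module; the helper name `area_between` is our own.

```python
from statistics import NormalDist

# The standard normal distribution: mean 0, standard deviation 1.
standard_normal = NormalDist(mu=0, sigma=1)

def area_between(lower_z, upper_z):
    """Proportion of the standard normal curve between two z-scores."""
    return standard_normal.cdf(upper_z) - standard_normal.cdf(lower_z)

for k in (1, 2, 3):
    print(f"z = -{k} to z = +{k}: {area_between(-k, k):.1%}")
```

The printed values come out at roughly 68.3%, 95.4%, and 99.7%, which is where the rounded figures in the empirical rule come from.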

Figure 4. Z-score indicating percentiles in a standardized normal distribution.

Figure 5. Shaded areas represent the area under the curve in the tails

Additionally, z-scores provide one way of defining outliers. For example, outliers are sometimes defined as scores that have z scores less than −3.00 or greater than +3.00. In other words, they are defined as scores that are more than three standard deviations from the mean. Some researchers will define outliers as greater than 2 standard deviations from the mean.
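A minimal sketch of this outlier rule is shown below. The data are hypothetical and invented purely for illustration, the function name `find_outliers` is our own, and we assume the sample standard deviation is used.

```python
from statistics import mean, stdev

# Hypothetical sample, invented for illustration only.
scores = [50, 52, 48, 51, 49, 50, 53, 47, 50, 51, 49, 52, 48, 50, 90]

def find_outliers(data, cutoff=3.0):
    """Return the values whose z-score is more than `cutoff` SDs from the mean."""
    m, s = mean(data), stdev(data)
    return [x for x in data if abs((x - m) / s) > cutoff]

print(find_outliers(scores))  # [90]
```

Passing `cutoff=2.0` applies the stricter two-standard-deviation definition mentioned above.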

We will have much more to say about percentiles in a distribution in the coming chapters. As it turns out, this is a quite powerful idea that enables us to make statements about how likely an outcome is and what that means for research questions we would like to answer and hypotheses we would like to test. But first, we need to make a brief foray into some ideas about probability.

Learning Objectives

Having read this chapter, you should be able to:

- Know that a z-score tells you how far any data point is from the mean, in units of standard deviation

- Identify uses of z-scores

- Compute and transform z-scores and x-values

- Describe the effects of standardizing a distribution

- Identify the z-score location on a normal distribution

Video recap options

- Watch this video (5 min) that explains why we need z-scores (Research By Design, 2016)

- Watch this quick video (2 min) on z-scores and standard deviation (mathispower4u, 2019)

- Watch this quick video (3 min) connecting z-scores, standard deviation and placement on normal distribution (with percentiles) (Quantitative Specialists, 2014).

Exercises – Chapter 6

- What are the two pieces of information contained in a z-score?

- A z-score takes a raw score and standardizes it into units of ________.

- Assume the following 5 scores represent a sample: 2, 3, 5, 5, 6. Transform these scores into z-scores.

- True or false:

- All normal distributions are symmetrical

- All normal distributions have a mean of 1.0

- All normal distributions have a standard deviation of 1.0

- The total area under the curve of all normal distributions is equal to 1

- Interpret the location, direction, and distance (near or far) of the following z- scores:

- -2.00

- 1.25

- 3.50

- -0.34

- Transform the following z-scores into a distribution with a mean of 10 and standard deviation of 2: -1.75, 2.20, 1.65, -0.95

- Calculate z-scores for the following raw scores taken from a population with a mean of 100 and standard deviation of 16: 112, 109, 56, 88, 135, 99

- What does a z-score of 0.00 represent?

- For a distribution with a standard deviation of 20, find z-scores that correspond to:

- One-half of a standard deviation below the mean

- 5 points above the mean

- Three standard deviations above the mean

- 22 points below the mean

- Calculate the raw score for the following z-scores from a distribution with a mean of 15 and standard deviation of 3:

- 4.0

- 2.2

- -1.3

- 0.46

- Let’s say we create a new measure of intelligence, and initial calibration finds that our scores have a mean of 40 and a standard deviation of 7. Three people who have scores of 52, 43, and 34 want to know how well they did on the measure. Convert their raw scores into z-scores.

Answers to Odd-Numbered Exercises – Ch. 6

1. The location above or below the mean (from the sign of the number) and the distance in standard deviations away from the mean (from the magnitude of the number).

3. M = 4.2, s = 1.64; z = -1.34, -0.73, 0.49, 0.49, 1.10

5. 1. 2 standard deviations below the mean, far

2. 1.25 standard deviations above the mean, near

3. 3.5 standard deviations above the mean, far

4. 0.34 standard deviations below the mean, near

7. z = 0.75, 0.56, -2.75, -0.75, 2.19, -0.06

9. 1. -0.50, 2. 0.25, 3. 3.00, 4. -1.10

11. Z = (52 − 40)/7 = 1.71

Z = (43 − 40)/7 = 0.43

Z = (34 − 40)/7 = -0.86